Prometheus-接入ETCD监控

Introduce

通过Prometheus监控ETCD数据,包括Grafana

Overview

1

2

3

4

5

6

7

|

curl http://<hostip>:2379/metrics

certs=/etc/kubernetes/pki/etcd/

curl --cacert $certs/ca.crt --cert $certs/peer.crt --key $certs/peer.key https://<hostip>:2379/metrics

|

Etcd 中的指标可以分为三大类:

Server

以下指标前缀为 etcd_server_。

- has_leader:集群是否存在 Leader

- leader_changes_seen_total:leader 切换数

- proposals_committed_total:已提交提案数

- 如果 member 和 leader 差距较大,说明 member 可能有问题,比如运行比较慢

- proposals_applied_total:已 apply 的提案数

- 已提交提案数和已 apply 提案数差值应该比较小,持续升高则说明 etcd 负载较高

- proposals_pending:等待提交的提案数

- 该值升高说明 etcd 负载较高或者 member 无法提交提案

- proposals_failed_total:失败的提案数

Disk

以下指标前缀为 etcd_disk_。

- wal_fsync_duration_seconds_bucket:反映系统执行 fdatasync 系统调用耗时

- backend_commit_duration_seconds_bucket:提交耗时

Network

以下指标前缀为etcd_network_。

- peer_sent_bytes_total:发送到其他 member 的字节数

- peer_received_bytes_total:从其他 member 收到的字节数

- peer_sent_failures_total: 发送失败数

- peer_received_failures_total:接收失败数

- peer_round_trip_time_seconds:节点间的 RTT

- client_grpc_sent_bytes_total:发送给客户端的字节数

- client_grpc_received_bytes_total:从客户端收到的字节数

核心指标

上面就是 etcd 的所有指标了,其中比较核心的是下面这几个:

- **

etcd_server_has_leader**:etcd 集群是否存在 leader,为 0 则表示不存在 leader,整个集群不可用

- **

etcd_server_leader_changes_seen_total**: etcd 集群累计 leader 切换次数

- **

etcd_disk_wal_fsync_duration_seconds_bucket**:wal fsync 调用耗时,正常应该低于 10ms

- **

etcd_disk_backend_commit_duration_seconds_bucket**:db fsync 调用耗时,正常应该低于 120ms

- **

etcd_network_peer_round_trip_time_seconds_bucket**:节点间 RTT 时间

Deploy

1⃣️配置Prometheus

1.创建 etcd service & endpoint

对于外置的 etcd 集群,或者以静态 pod 方式启动的 etcd 集群,都不会在 k8s 里创建 service,而 Prometheus 需要根据 service + endpoint 来抓取,因此需要手动创建

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

| cat > etcd-svc-ep.yaml << EOF

apiVersion: v1

kind: Endpoints

metadata:

labels:

app: etcd-prom

name: etcd-prom

namespace: kube-system

subsets:

- addresses:

# etcd 节点 ip

- ip: 192.168.10.41

- ip: 192.168.10.55

- ip: 192.168.10.83

ports:

- name: https-metrics

port: 2379 # etcd 端口

protocol: TCP

---

apiVersion: v1

kind: Service

metadata:

labels:

app: etcd-prom

name: etcd-prom

namespace: kube-system

spec:

ports:

# 记住这个 port-name,后续会用到

- name: https-metrics

port: 2379

protocol: TCP

targetPort: 2379

type: ClusterIP

EOF

kubectl apply -f etcd-svc-ep.yaml

|

2.创建 secret 存储证书

1

2

3

| certs=/etc/kubernetes/pki/etcd/

kubectl -n monitoring create secret generic etcd-ssl --from-file=ca=$certs/ca.crt --from-file=cert=$certs/peer.crt --from-file=key=$certs/peer.key

|

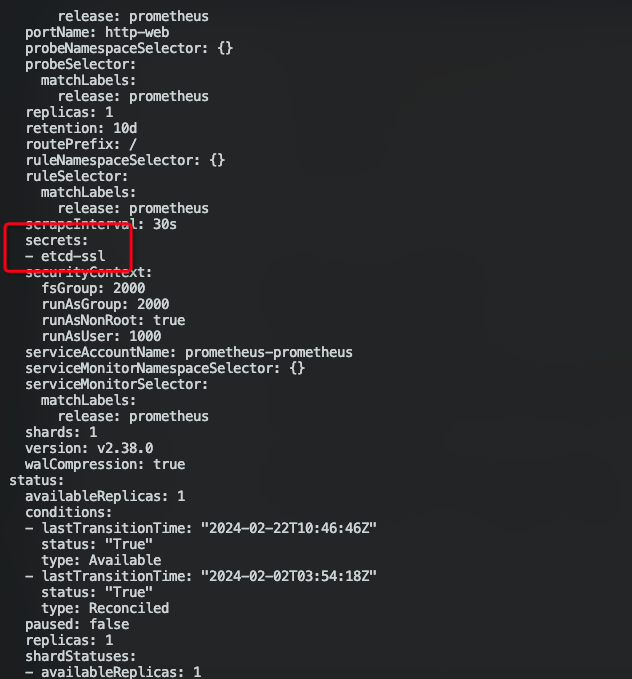

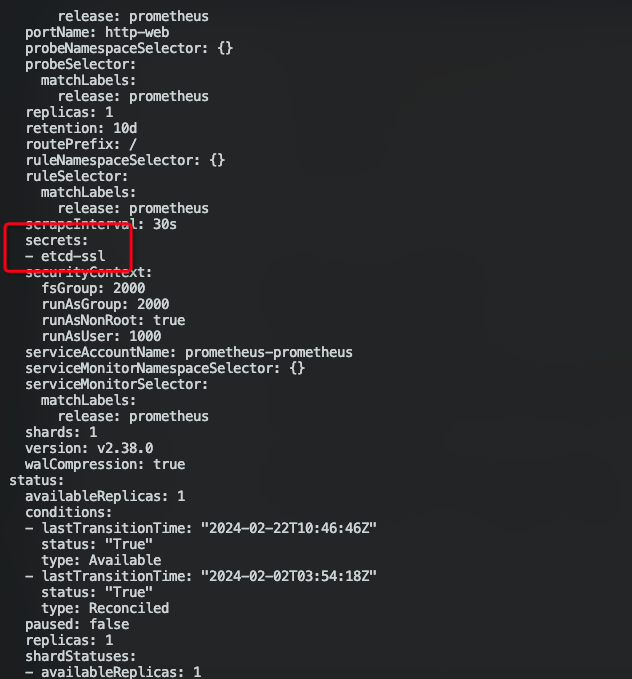

3.将证书挂载至 Prometheus 容器

由于 Prometheus 是 operator 方式部署的,所以只需要修改 prometheus 对象

1

| kubectl -n monitoring edit prometheus prometheus

|

4.创建 Etcd ServiceMonitor

接下来则是创建一个 ServiceMonitor 对象,让 Prometheus 去采集 etcd 的指标

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

| cat > etcd-sm.yaml << EOF

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: etcd

namespace: monitoring

labels:

app: etcd

# 主要添加prometheus 匹配ServiceMonitor是否匹配

release: prometheus

spec:

jobLabel: k8s-app

endpoints:

- interval: 30s

port: https-metrics #这个 port 对应 etcd-svc 的 spec.ports.name

scheme: https

tlsConfig:

caFile: /etc/prometheus/secrets/etcd-ssl/ca #证书路径

certFile: /etc/prometheus/secrets/etcd-ssl/cert

keyFile: /etc/prometheus/secrets/etcd-ssl/key

insecureSkipVerify: true # 关闭证书校验

selector:

matchLabels:

app: etcd-prom # 跟 etcd-svc 的 lables 保持一致

namespaceSelector:

matchNames:

- kube-system

EOF

kubectl -n monitoring apply -f etcd-sm.yaml

|

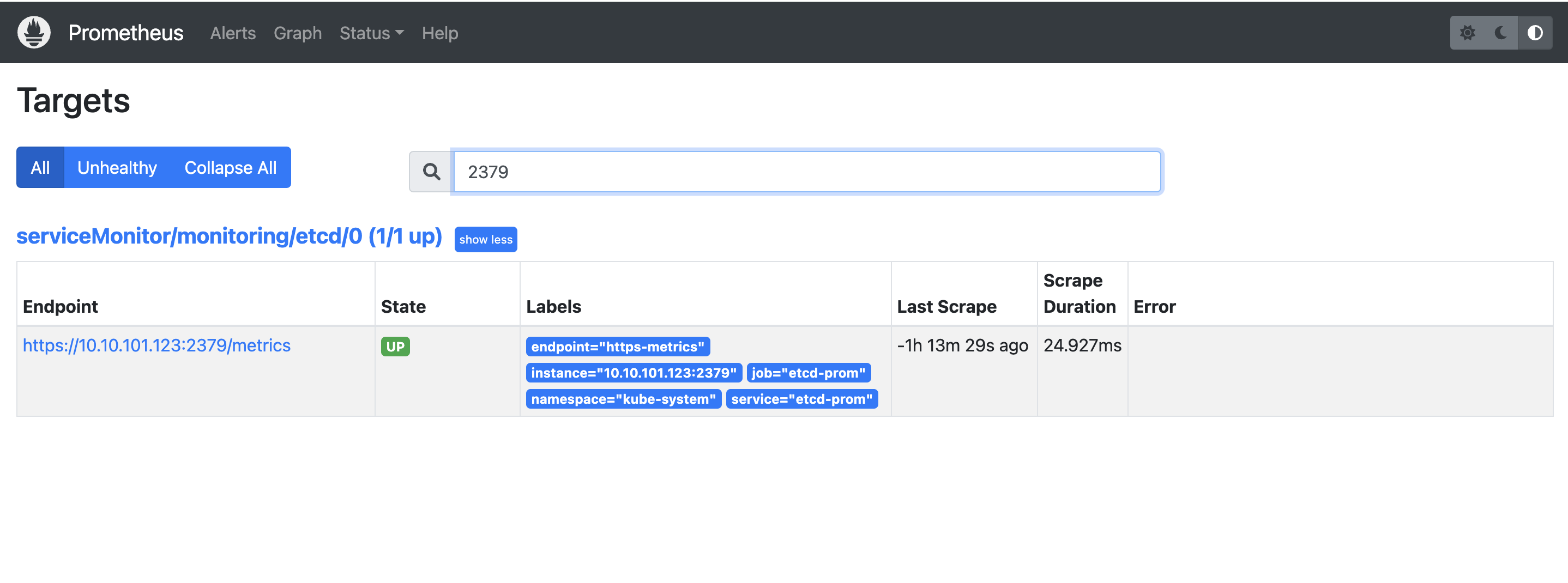

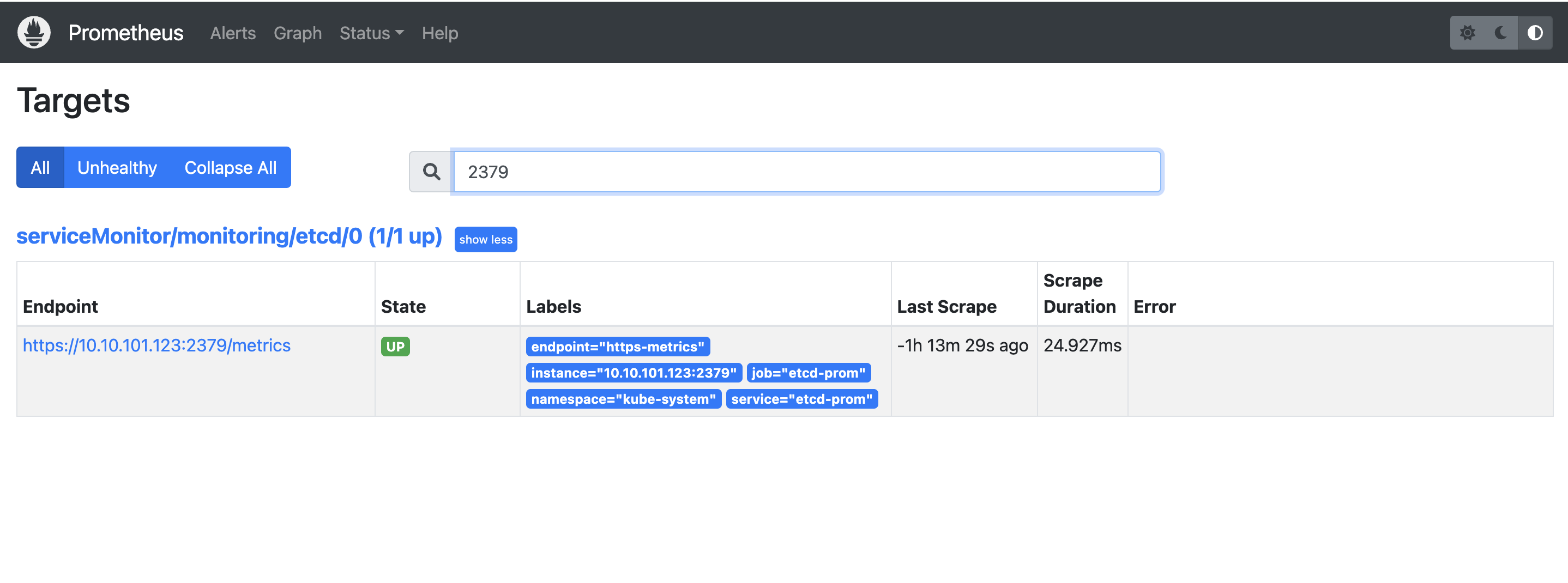

在Prometheus中就能看见这个2379的Target

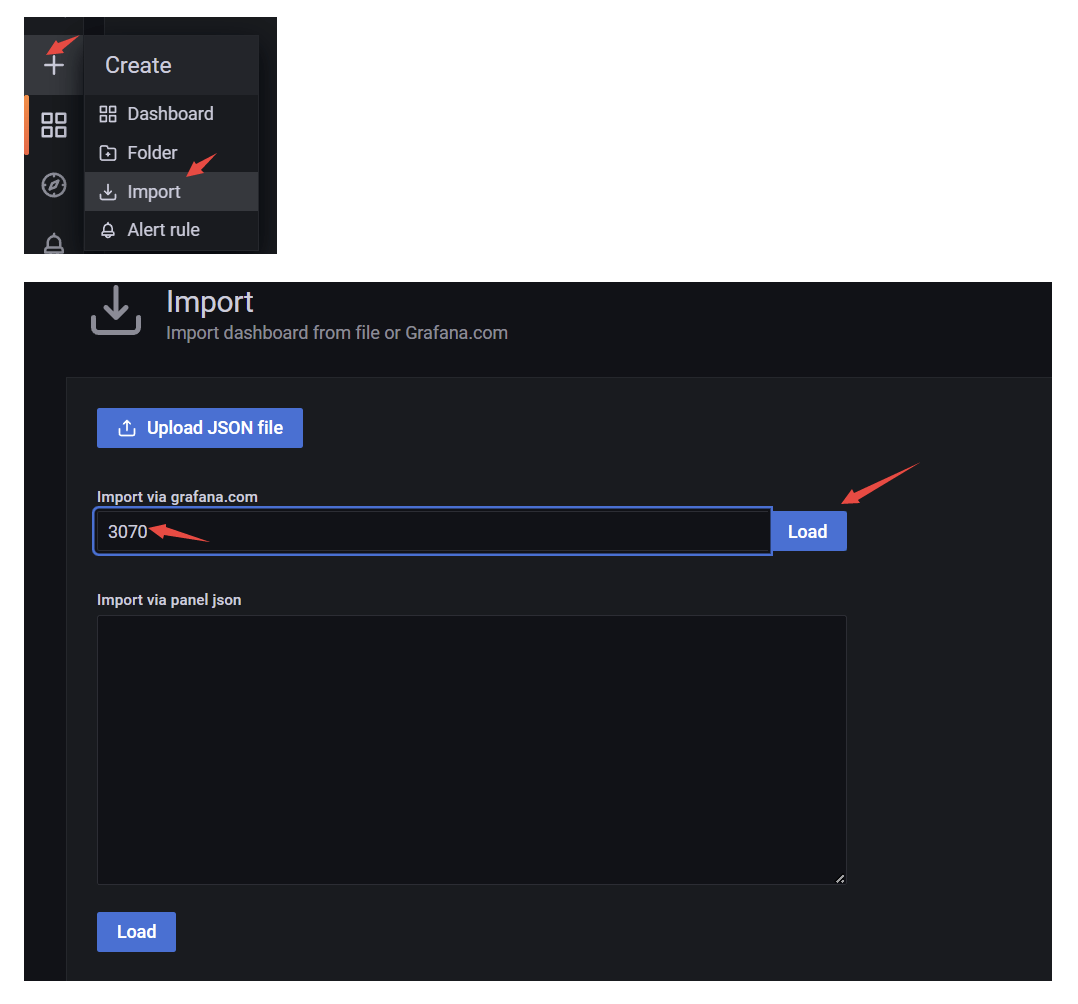

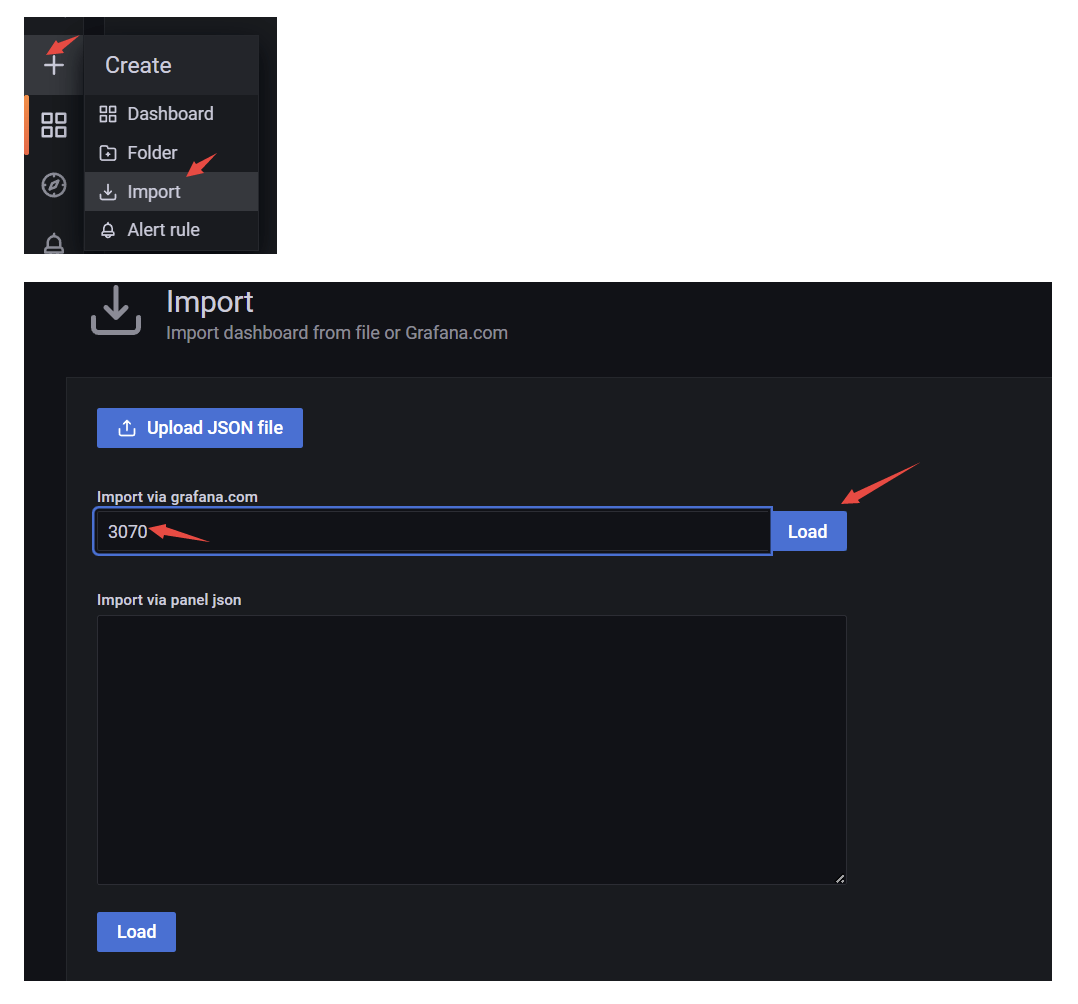

2⃣️导入Grafana

效果:

3⃣️配置告警

对于部分核心指标,建议配置到告警规则里,这样出问题时能及时发现

官方:etcd3_alert.rules.yml

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

|

groups:

- name: etcd

rules:

- alert: etcdInsufficientMembers

annotations:

message: 'etcd cluster "{{ $labels.job }}": insufficient members ({{ $value

}}).'

expr: |

sum(up{job=~".*etcd.*"} == bool 1) by (job) < ((count(up{job=~".*etcd.*"}) by (job) + 1) / 2)

for: 3m

labels:

severity: critical

- alert: etcdNoLeader

annotations:

message: 'etcd cluster "{{ $labels.job }}": member {{ $labels.instance }} has

no leader.'

expr: |

etcd_server_has_leader{job=~".*etcd.*"} == 0

for: 1m

labels:

severity: critical

- alert: etcdHighNumberOfLeaderChanges

annotations:

message: 'etcd cluster "{{ $labels.job }}": instance {{ $labels.instance }}

has seen {{ $value }} leader changes within the last hour.'

expr: |

rate(etcd_server_leader_changes_seen_total{job=~".*etcd.*"}[15m]) > 3

for: 15m

labels:

severity: warning

- alert: etcdHighNumberOfFailedGRPCRequests

annotations:

message: 'etcd cluster "{{ $labels.job }}": {{ $value }}% of requests for {{

$labels.grpc_method }} failed on etcd instance {{ $labels.instance }}.'

expr: |

100 * sum(rate(grpc_server_handled_total{job=~".*etcd.*", grpc_code!="OK"}[5m])) BY (job, instance, grpc_service, grpc_method)

/

sum(rate(grpc_server_handled_total{job=~".*etcd.*"}[5m])) BY (job, instance, grpc_service, grpc_method)

> 1

for: 10m

labels:

severity: warning

- alert: etcdHighNumberOfFailedGRPCRequests

annotations:

message: 'etcd cluster "{{ $labels.job }}": {{ $value }}% of requests for {{

$labels.grpc_method }} failed on etcd instance {{ $labels.instance }}.'

expr: |

100 * sum(rate(grpc_server_handled_total{job=~".*etcd.*", grpc_code!="OK"}[5m])) BY (job, instance, grpc_service, grpc_method)

/

sum(rate(grpc_server_handled_total{job=~".*etcd.*"}[5m])) BY (job, instance, grpc_service, grpc_method)

> 5

for: 5m

labels:

severity: critical

- alert: etcdHighNumberOfFailedProposals

annotations:

message: 'etcd cluster "{{ $labels.job }}": {{ $value }} proposal failures within

the last hour on etcd instance {{ $labels.instance }}.'

expr: |

rate(etcd_server_proposals_failed_total{job=~".*etcd.*"}[15m]) > 5

for: 15m

labels:

severity: warning

- alert: etcdHighFsyncDurations

annotations:

message: 'etcd cluster "{{ $labels.job }}": 99th percentile fync durations are

{{ $value }}s on etcd instance {{ $labels.instance }}.'

expr: |

histogram_quantile(0.99, rate(etcd_disk_wal_fsync_duration_seconds_bucket{job=~".*etcd.*"}[5m]))

> 0.5

for: 10m

labels:

severity: warning

- alert: etcdHighCommitDurations

annotations:

message: 'etcd cluster "{{ $labels.job }}": 99th percentile commit durations

{{ $value }}s on etcd instance {{ $labels.instance }}.'

expr: |

histogram_quantile(0.99, rate(etcd_disk_backend_commit_duration_seconds_bucket{job=~".*etcd.*"}[5m]))

> 0.25

for: 10m

labels:

severity: warning

- alert: etcdHighNodeRTTDurations

annotations:

message: 'etcd cluster "{{ $labels.job }}": node RTT durations

{{ $value }}s on etcd instance {{ $labels.instance }}.'

expr: |

histogram_quantile(0.99,rate(etcd_network_peer_round_trip_time_seconds_bucket[5m])) > 0.5

for: 10m

labels:

severity: warning

|

核心策略

实际上只需要对前面提到的几个核心指标配置上告警规则即可:

sum(up{job=~".\*etcd.\*"} == bool 1) by (job) < ((count(up{job=~".\*etcd.\*"}) by (job) + 1) / 2) : 当前存活的 etcd 节点数是否小于 (n+1)/2

- 集群中存活小于 (n+1)/2 那么整个集群都会不可用

etcd_server_has_leader{job=~".\*etcd.\*"} == 0:etcd 是否存在leader

- 为 0 则表示不存在 leader,同样整个集群不可用

rate(etcd_server_leader_changes_seen_total{job=~".\*etcd.\*"}[15m]) > 3: 15 分钟 内集群leader切换次数是否超过 3 次

histogram_quantile(0.99, rate(etcd_disk_wal_fsync_duration_seconds_bucket{job=~".\*etcd.\*"}[5m])) > 0.5:5 分钟内 WAL fsync 调用延迟 p99 大于 500ms- **

histogram_quantile(0.99,rate(etcd_disk_backend_commit_duration_seconds_bucket{job=~".\*etcd.\*"}[5m])) > 0.25**:5 分钟内 DB fsync *调用延迟 p99 大于 500**ms*

histogram_quantile(0.99,rate(etcd_network_peer_round_trip_time_seconds_bucket[5m])) > 0.5: 5 分钟内 节点之间 RTT 大于 500 ms

Yaml:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

|

groups:

- name: etcd

rules:

- alert: etcdInsufficientMembers

annotations:

message: 'etcd cluster "{{ $labels.job }}": insufficient members ({{ $value

}}).'

expr: |

sum(up{job=~".*etcd.*"} == bool 1) by (job) < ((count(up{job=~".*etcd.*"}) by (job) + 1) / 2)

for: 3m

labels:

severity: critical

- alert: etcdNoLeader

annotations:

message: 'etcd cluster "{{ $labels.job }}": member {{ $labels.instance }} has

no leader.'

expr: |

etcd_server_has_leader{job=~".*etcd.*"} == 0

for: 1m

labels:

severity: critical

- alert: etcdHighFsyncDurations

annotations:

message: 'etcd cluster "{{ $labels.job }}": 99th percentile fync durations are

{{ $value }}s on etcd instance {{ $labels.instance }}.'

expr: |

histogram_quantile(0.99, rate(etcd_disk_wal_fsync_duration_seconds_bucket{job=~".*etcd.*"}[5m]))

> 0.5

for: 10m

labels:

severity: warning

- alert: etcdHighCommitDurations

annotations:

message: 'etcd cluster "{{ $labels.job }}": 99th percentile commit durations

{{ $value }}s on etcd instance {{ $labels.instance }}.'

expr: |

histogram_quantile(0.99, rate(etcd_disk_backend_commit_duration_seconds_bucket{job=~".*etcd.*"}[5m]))

> 0.25

for: 10m

labels:

severity: warning

- alert: etcdHighNodeRTTDurations

annotations:

message: 'etcd cluster "{{ $labels.job }}": node RTT durations

{{ $value }}s on etcd instance {{ $labels.instance }}.'

expr: |

histogram_quantile(0.99,rate(etcd_network_peer_round_trip_time_seconds_bucket[5m])) > 0.5

for: 10m

labels:

severity: warning

|

导入到Prometheus

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

| cat > pr.yaml << "EOF"

apiVersion: monitoring.coreos.com/v1

kind: PrometheusRule

metadata:

labels:

prometheus: k8s

role: alert-rules

name: etcd-rules

namespace: monitoring

spec:

groups:

- name: etcd

rules:

- alert: etcdInsufficientMembers

annotations:

message: 'etcd cluster "{{ $labels.job }}": insufficient members ({{ $value }}).'

expr: |

sum(up{job=~".*etcd.*"} == bool 1) by (job) < ((count(up{job=~".*etcd.*"}) by (job) + 1) / 2)

for: 3m

labels:

severity: critical

- alert: etcdNoLeader

annotations:

message: 'etcd cluster "{{ $labels.job }}": member {{ $labels.instance }} has no leader.'

expr: |

etcd_server_has_leader{job=~".*etcd.*"} == 0

for: 1m

labels:

severity: critical

- alert: etcdHighFsyncDurations

annotations:

message: 'etcd cluster "{{ $labels.job }}": 99th percentile fsync durations are {{ $value }}s(normal is < 10ms) on etcd instance {{ $labels.instance }}.'

expr: |

histogram_quantile(0.99, rate(etcd_disk_wal_fsync_duration_seconds_bucket{job=~".*etcd.*"}[5m])) > 0.5

for: 3m

labels:

severity: warning

- alert: etcdHighCommitDurations

annotations:

message: 'etcd cluster "{{ $labels.job }}": 99th percentile commit durations {{ $value }}s(normal is < 120ms) on etcd instance {{ $labels.instance }}.'

expr: |

histogram_quantile(0.99, rate(etcd_disk_backend_commit_duration_seconds_bucket{job=~".*etcd.*"}[5m])) > 0.25

for: 3m

labels:

severity: warning

- alert: etcdHighNodeRTTDurations

annotations:

message: 'etcd cluster "{{ $labels.job }}": node RTT durations {{ $value }}s on etcd instance {{ $labels.instance }}.'

expr: |

histogram_quantile(0.99, rate(etcd_network_peer_round_trip_time_seconds_bucket[5m])) > 0.5

for: 3m

labels:

severity: warning

EOF

kubectl -n monitoring apply -f pr.yaml

|

配置prometheus:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

| kubectl -n monitoring get prometheus

apiVersion: monitoring.coreos.com/v1

kind: Prometheus

metadata:

name: example

spec:

serviceAccountName: prometheus

replicas: 2

alerting:

alertmanagers:

- namespace: default

name: alertmanager-example

port: web

serviceMonitorSelector:

matchLabels:

team: frontend

ruleSelector:

matchLabels:

prometheus: k8s

role: alert-rules

ruleNamespaceSelector:

matchLabels:

team: frontend

|