K8s Core Resource Objects: Configuration and Storage Resources

Based on Kubernetes 1.25

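Volumes

What is a volume

Files inside a container are stored on disk only temporarily, so the data may be lost when the Pod restarts. Volumes exist to make that data persistent.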

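Volume types

emptyDir volumes

An emptyDir volume is created when a Pod is assigned to a node

- The emptyDir volume exists for as long as the Pod keeps running on that node

- The emptyDir volume is initially empty

- The emptyDir volume disappears once the Pod is removed from that node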

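hostPath volumes

Mounts a file or directory from the host node's filesystem into a Pod

configMap volumes

Provides a way to inject ConfigMap data into a Pod

- Data stored in a ConfigMap object can be referenced by a configMap volume and then consumed by the containerized applications running in the Pod

downwardAPI volumes

Exposes Downward API data to applications

- Lets a container obtain information about itself or the cluster without using a K8s client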

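secret volumes

Used to pass sensitive information to a Pod

- The data can be mounted into the Pod as files

nfs volumes

Mounts an NFS share into a Pod

- NFS data is not removed when the Pod is removed

cephfs volumes

Allows an existing CephFS volume to be mounted into a Pod

- CephFS volume data is not removed when the Pod is removed

persistentVolumeClaim volumes

Used to mount a PersistentVolume (PV) into a Pod

- The data is not removed when the Pod is removed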

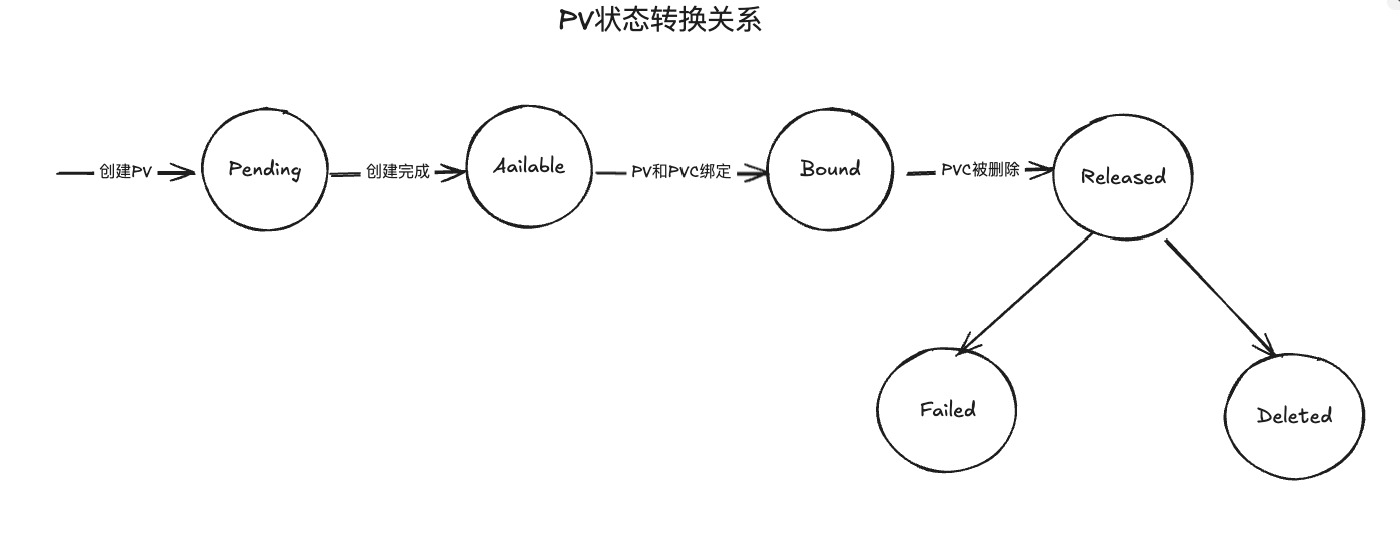

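Volume phases

- Available: a free resource that is not yet bound and is waiting to be used

- Bound: the volume is bound and in use by a claim

- Released: the binding is gone, but the cluster has not yet reclaimed the volume

- Failed: automatic reclamation of the volume failed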
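The four phases can be modelled as a small enumeration. The following is a minimal sketch, not the Kubernetes API types: the names `Phase`, `describe`, and `canBind` are hypothetical and chosen only for illustration.

```go
package main

import "fmt"

// Phase is an illustrative stand-in for a PV phase (not the real API type).
type Phase string

const (
	Available Phase = "Available"
	Bound     Phase = "Bound"
	Released  Phase = "Released"
	Failed    Phase = "Failed"
)

// describe returns a one-line meaning for each phase.
func describe(p Phase) string {
	switch p {
	case Available:
		return "free, waiting to be claimed"
	case Bound:
		return "bound to a claim"
	case Released:
		return "claim deleted, not yet reclaimed by the cluster"
	case Failed:
		return "automatic reclamation failed"
	default:
		return "unknown phase"
	}
}

// canBind reports whether a PV in phase p can be bound to a new claim.
// Only Available volumes qualify.
func canBind(p Phase) bool {
	return p == Available
}

func main() {
	for _, p := range []Phase{Available, Bound, Released, Failed} {
		fmt.Printf("%s: bindable=%t, %s\n", p, canBind(p), describe(p))
	}
}
```

The useful property is that only `Available` is treated as bindable, which is why a Released volume needs extra steps before it can be used again.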

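PV and PVC

What are PV and PVC

A PV (PersistentVolume) is a piece of storage in the cluster

A PVC (PersistentVolumeClaim) is a request for storage from the cluster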

PersistentVolumeSpec

// PersistentVolumeSpec is the specification of a persistent volume.

PersistentVolumeClaimSpec

    // PersistentVolumeClaimSpec describes the common attributes of storage devices
    // and allows a Source for provider-specific attributes
    type PersistentVolumeClaimSpec struct {
        // accessModes contains the desired access modes the volume should have.
        // More info: https://kubernetes.io/docs/concepts/storage/persistent-volumes#access-modes-1
        // +optional
        // Note: access modes
        AccessModes []PersistentVolumeAccessMode `json:"accessModes,omitempty" protobuf:"bytes,1,rep,name=accessModes,casttype=PersistentVolumeAccessMode"`

        // selector is a label query over volumes to consider for binding.
        // +optional
        // Note: selects which volumes may be bound
        Selector *metav1.LabelSelector `json:"selector,omitempty" protobuf:"bytes,4,opt,name=selector"`

        // resources represents the minimum resources the volume should have.
        // If RecoverVolumeExpansionFailure feature is enabled users are allowed to specify resource requirements
        // that are lower than previous value but must still be higher than capacity recorded in the
        // status field of the claim.
        // More info: https://kubernetes.io/docs/concepts/storage/persistent-volumes#resources
        // +optional
        // Note: minimum resource requirements
        Resources ResourceRequirements `json:"resources,omitempty" protobuf:"bytes,2,opt,name=resources"`

        // volumeName is the binding reference to the PersistentVolume backing this claim.
        // +optional
        // Note: name of the PV bound to this PVC
        VolumeName string `json:"volumeName,omitempty" protobuf:"bytes,3,opt,name=volumeName"`

        // storageClassName is the name of the StorageClass required by the claim.
        // More info: https://kubernetes.io/docs/concepts/storage/persistent-volumes#class-1
        // +optional
        // Note: StorageClass name
        StorageClassName *string `json:"storageClassName,omitempty" protobuf:"bytes,5,opt,name=storageClassName"`

        // volumeMode defines what type of volume is required by the claim.
        // Value of Filesystem is implied when not included in claim spec.
        // +optional
        // Note: same as the PV's VolumeMode
        VolumeMode *PersistentVolumeMode `json:"volumeMode,omitempty" protobuf:"bytes,6,opt,name=volumeMode,casttype=PersistentVolumeMode"`

        // dataSource field can be used to specify either:
        // * An existing VolumeSnapshot object (snapshot.storage.k8s.io/VolumeSnapshot)
        // * An existing PVC (PersistentVolumeClaim)
        // If the provisioner or an external controller can support the specified data source,
        // it will create a new volume based on the contents of the specified data source.
        // If the AnyVolumeDataSource feature gate is enabled, this field will always have
        // the same contents as the DataSourceRef field.
        // +optional
        // Note: used to implement storage snapshots; only configurable with CSI
        DataSource *TypedLocalObjectReference `json:"dataSource,omitempty" protobuf:"bytes,7,opt,name=dataSource"`

        // dataSourceRef specifies the object from which to populate the volume with data, if a non-empty
        // volume is desired. This may be any local object from a non-empty API group (non
        // core object) or a PersistentVolumeClaim object.
        // When this field is specified, volume binding will only succeed if the type of
        // the specified object matches some installed volume populator or dynamic
        // provisioner.
        // This field will replace the functionality of the DataSource field and as such
        // if both fields are non-empty, they must have the same value. For backwards
        // compatibility, both fields (DataSource and DataSourceRef) will be set to the same
        // value automatically if one of them is empty and the other is non-empty.
        // There are two important differences between DataSource and DataSourceRef:
        // * While DataSource only allows two specific types of objects, DataSourceRef
        //   allows any non-core object, as well as PersistentVolumeClaim objects.
        // * While DataSource ignores disallowed values (dropping them), DataSourceRef
        //   preserves all values, and generates an error if a disallowed value is
        //   specified.
        // (Beta) Using this field requires the AnyVolumeDataSource feature gate to be enabled.
        // +optional
        // Note: reference to the data source object
        DataSourceRef *TypedLocalObjectReference `json:"dataSourceRef,omitempty" protobuf:"bytes,8,opt,name=dataSourceRef"`
    }

PV types

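PVs are implemented as plugins. The following implementations are common in K8s clusters:

- cephfs: CephFS volume

- csi: Container Storage Interface (CSI)

- fc: Fibre Channel storage

- hostPath: hostPath volume, for single-node use only

- iscsi: iSCSI storage

- local: local storage devices mounted on a node

- nfs: Network File System (NFS) storage

- rbd: RADOS Block Device (RBD) volume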

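PV access modes

A PV can be mounted on a host in any way that the resource provider supports

The access modes are:

- ReadWriteOnce: the volume can be mounted read-write by a single node

  - Multiple Pods running on that same node can still access the volume

- ReadOnlyMany: the volume can be mounted read-only by many nodes

- ReadWriteMany: the volume can be mounted read-write by many nodes

- ReadWriteOncePod: the volume can be mounted read-write by a single Pod

  - Guarantees that only one Pod in the whole cluster can read or write the PV (available since K8s 1.22)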
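When a claim is matched against a volume, every access mode the claim asks for has to be offered by the volume. The check can be sketched as below, assuming modes are plain strings; the names `AccessMode` and `supportsAll` are hypothetical and this is not the controller's own code.

```go
package main

import "fmt"

// AccessMode is an illustrative stand-in for a PV access mode.
type AccessMode string

const (
	ReadWriteOnce    AccessMode = "ReadWriteOnce"
	ReadOnlyMany     AccessMode = "ReadOnlyMany"
	ReadWriteMany    AccessMode = "ReadWriteMany"
	ReadWriteOncePod AccessMode = "ReadWriteOncePod"
)

// supportsAll reports whether every requested mode is among the offered modes.
func supportsAll(offered, requested []AccessMode) bool {
	have := make(map[AccessMode]bool, len(offered))
	for _, m := range offered {
		have[m] = true
	}
	for _, m := range requested {
		if !have[m] {
			return false
		}
	}
	return true
}

func main() {
	pvModes := []AccessMode{ReadWriteOnce, ReadOnlyMany}
	fmt.Println(supportsAll(pvModes, []AccessMode{ReadWriteOnce})) // true
	fmt.Println(supportsAll(pvModes, []AccessMode{ReadWriteMany})) // false
}
```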

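PV reclaim policies

- Retain (the default for manually created PVs): the volume must be reclaimed manually

- Recycle: deprecated and no longer recommended

- Delete: deletes the volume directly; requires plugin support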

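The reclaimVolume function implements volume.Spec.PersistentVolumeReclaimPolicy and starts the appropriate reclaim operation. The flow is:

- If the PV carries the pv.kubernetes.io/migrated-to annotation, the PV has been migrated: the PV controller stands down and the external CSI components handle this PV

- Otherwise it reads volume.Spec.PersistentVolumeReclaimPolicy and handles the PV accordingly

  - Retain: logs a message and does nothing else

  - Recycle: calls recycleVolumeOperation, which internally calls the Recycle method of RecyclableVolumePlugin

  - Delete: calls deleteVolumeOperation, which internally calls the volume plugin's delete method (DeletableVolumePlugin)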

// reclaimVolume implements volume.Spec.PersistentVolumeReclaimPolicy and
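The dispatch described in this section can be sketched as a single switch. This is a simplified illustration, not the controller source: `Volume` and `reclaimAction` are hypothetical, the function only returns a description of the step it would take, and the annotation key is written as `pv.kubernetes.io/migrated-to` on the assumption that this matches the upstream constant.

```go
package main

import "fmt"

// ReclaimPolicy is an illustrative stand-in for the PV reclaim policy.
type ReclaimPolicy string

const (
	Retain  ReclaimPolicy = "Retain"
	Recycle ReclaimPolicy = "Recycle"
	Delete  ReclaimPolicy = "Delete"
)

// migratedToAnnotation is assumed to be the key that marks a migrated PV.
const migratedToAnnotation = "pv.kubernetes.io/migrated-to"

// Volume is a hypothetical, trimmed-down PV.
type Volume struct {
	Name        string
	Annotations map[string]string
	Policy      ReclaimPolicy
}

// reclaimAction describes which step the reclaim flow would take for v.
func reclaimAction(v Volume) string {
	// A migrated PV is left to the external CSI components.
	if v.Annotations[migratedToAnnotation] != "" {
		return "skip: handled by external CSI components"
	}
	switch v.Policy {
	case Retain:
		return "retain: log only"
	case Recycle:
		return "recycle: run recycleVolumeOperation"
	case Delete:
		return "delete: run deleteVolumeOperation"
	default:
		return "error: unknown reclaim policy"
	}
}

func main() {
	volumes := []Volume{
		{Name: "pv-a", Policy: Retain},
		{Name: "pv-b", Policy: Delete},
		{Name: "pv-c", Policy: Delete, Annotations: map[string]string{migratedToAnnotation: "example.csi.driver"}},
	}
	for _, v := range volumes {
		fmt.Println(v.Name, "->", reclaimAction(v))
	}
}
```

Checking the annotation before the policy mirrors the order given above: a migrated PV never reaches the policy switch.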

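PV phase transitions

- A PV in the Released phase cannot go back to Available to bind a new PVC

- There are two ways to reuse a PV

  - Create a new PV and copy the original PV's definition into it

  - Delete the Pod but keep the PVC, then reuse the PVC next time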
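The first reuse option, creating a new PV from the old one's definition, amounts to copying the storage details while dropping the binding state. A minimal sketch follows, assuming a hypothetical trimmed-down `PV` struct and helper `cloneForReuse`. In a real cluster the phase is set by the controller rather than by the author of the manifest, so setting it here is only a modelling shortcut.

```go
package main

import "fmt"

// PV is a hypothetical, trimmed-down persistent volume.
type PV struct {
	Name       string
	CapacityGi int
	Path       string // where the data lives on the backend
	ClaimRef   string // name of the bound PVC, empty when unbound
	Phase      string
}

// cloneForReuse copies the storage details of old into a new PV and drops
// the old claim reference, so the new object can be bound again.
func cloneForReuse(old PV, newName string) PV {
	return PV{
		Name:       newName,
		CapacityGi: old.CapacityGi,
		Path:       old.Path,
		ClaimRef:   "",
		Phase:      "Available",
	}
}

func main() {
	released := PV{Name: "pv-old", CapacityGi: 10, Path: "/exports/data", ClaimRef: "app-data", Phase: "Released"}
	fresh := cloneForReuse(released, "pv-new")
	fmt.Printf("%+v\n", fresh)
}
```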

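Matching a PVC to a PV

The PV controller uses findBestMatchForClaim to match a PVC to a PV. It calls findByClaim internally, and the flow is:

- Match on access modes

- Filter out PVs by the following rules

  - PVs that are already bound

  - PVs smaller than the PVC's request

  - PVs with a different volume mode

  - PVs marked for deletion

  - PVs that are not in the Available phase

  - PVs that do not match the PVC's label selector

  - PVs whose StorageClass does not match

  - PVs whose node affinity does not match

- Among the remaining PVs, pick the one whose capacity most closely matches the PVC's requested storage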

// find returns the nearest PV from the ordered list or nil if a match is not found
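The filter-then-pick-closest flow can be sketched as follows. This is a simplified illustration under stated assumptions, not the controller's implementation: `PV`, `PVC`, `eligible`, `findBestMatch`, and `sampleVolumes` are hypothetical names, capacities are whole GiB, and the label selector and node affinity checks are left out to keep the example short.

```go
package main

import "fmt"

// PV and PVC are hypothetical, trimmed-down stand-ins for the API objects.
type PV struct {
	Name         string
	CapacityGi   int
	AccessModes  []string
	StorageClass string
	VolumeMode   string
	Phase        string
	ClaimRef     string // non-empty when already bound
	Deleting     bool   // true when marked for deletion
}

type PVC struct {
	RequestGi    int
	AccessModes  []string
	StorageClass string
	VolumeMode   string
}

// hasModes reports whether offered contains every requested access mode.
func hasModes(offered, requested []string) bool {
	for _, want := range requested {
		found := false
		for _, have := range offered {
			if have == want {
				found = true
				break
			}
		}
		if !found {
			return false
		}
	}
	return true
}

// eligible applies the exclusion rules (selector and affinity omitted).
func eligible(claim PVC, v PV) bool {
	return hasModes(v.AccessModes, claim.AccessModes) &&
		v.ClaimRef == "" &&
		v.Phase == "Available" &&
		!v.Deleting &&
		v.CapacityGi >= claim.RequestGi &&
		v.VolumeMode == claim.VolumeMode &&
		v.StorageClass == claim.StorageClass
}

// findBestMatch returns the eligible PV with the smallest capacity, or nil.
func findBestMatch(claim PVC, volumes []PV) *PV {
	var best *PV
	for i := range volumes {
		v := &volumes[i]
		if !eligible(claim, *v) {
			continue
		}
		if best == nil || v.CapacityGi < best.CapacityGi {
			best = v
		}
	}
	return best
}

// sampleVolumes returns a small fixed inventory used by main.
func sampleVolumes() []PV {
	rwo := []string{"ReadWriteOnce"}
	return []PV{
		{Name: "pv-5", CapacityGi: 5, AccessModes: rwo, StorageClass: "standard", VolumeMode: "Filesystem", Phase: "Available"},
		{Name: "pv-8", CapacityGi: 8, AccessModes: rwo, StorageClass: "standard", VolumeMode: "Filesystem", Phase: "Bound", ClaimRef: "other"},
		{Name: "pv-20", CapacityGi: 20, AccessModes: rwo, StorageClass: "standard", VolumeMode: "Filesystem", Phase: "Available"},
		{Name: "pv-10", CapacityGi: 10, AccessModes: rwo, StorageClass: "standard", VolumeMode: "Filesystem", Phase: "Available"},
	}
}

func main() {
	claim := PVC{RequestGi: 8, AccessModes: []string{"ReadWriteOnce"}, StorageClass: "standard", VolumeMode: "Filesystem"}
	if pv := findBestMatch(claim, sampleVolumes()); pv != nil {
		fmt.Println("bound to", pv.Name) // bound to pv-10
	} else {
		fmt.Println("no match")
	}
}
```

In the sample inventory the 8 GiB volume would be the tightest fit, but it is already bound, so the 10 GiB volume wins over the 20 GiB one.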

StorageClass

StorageClass enables dynamic provisioning of PVs, which removes the inconvenience of creating PVs by hand

StorageClass

// StorageClass describes the parameters for a class of storage for

Provisioner

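Some common provisioners

- CephFS: open-source Ceph file storage

- Cinder: OpenStack block storage

- NFS: Network File System storage

- RBD: open-source Ceph block storage

- Local: local volumes do not yet support dynamic provisioning, but a StorageClass still needs to be created for them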

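The relationship between PV, PVC, and StorageClass

- The PV controller watches for the creation of PVs and PVCs

- When the PV controller sees a PVC being created, it assembles a complete PV from the PVC and the StorageClass the PVC refers to

- It calls client-go to save the PV to K8s

- When the PV controller sees the PV being created, it creates the actual storage volume according to the configured storage parameters and mounts it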
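The step where a PV is assembled from a PVC and its StorageClass can be sketched as a plain function. This is an illustration under stated assumptions, not the provisioner's code: the structs, the `buildPV` helper, the `example.com/nfs` provisioner name, and the `pvc-<namespace>-<name>` naming scheme are all hypothetical.

```go
package main

import "fmt"

// StorageClass, PVC, and PV are hypothetical, trimmed-down stand-ins.
type StorageClass struct {
	Name          string
	Provisioner   string
	Parameters    map[string]string
	ReclaimPolicy string
}

type PVC struct {
	Name             string
	Namespace        string
	StorageClassName string
	RequestGi        int
}

type PV struct {
	Name             string
	CapacityGi       int
	StorageClassName string
	ReclaimPolicy    string
	ClaimRef         string
	Provisioner      string
	Parameters       map[string]string
}

// buildPV combines the size requested by the claim with the backend
// settings of its StorageClass into a complete PV definition.
func buildPV(claim PVC, classes map[string]StorageClass) (PV, error) {
	sc, ok := classes[claim.StorageClassName]
	if !ok {
		return PV{}, fmt.Errorf("storage class %q not found", claim.StorageClassName)
	}
	return PV{
		Name:             "pvc-" + claim.Namespace + "-" + claim.Name, // hypothetical naming scheme
		CapacityGi:       claim.RequestGi,
		StorageClassName: sc.Name,
		ReclaimPolicy:    sc.ReclaimPolicy,
		ClaimRef:         claim.Namespace + "/" + claim.Name,
		Provisioner:      sc.Provisioner,
		Parameters:       sc.Parameters,
	}, nil
}

func main() {
	classes := map[string]StorageClass{
		"standard": {Name: "standard", Provisioner: "example.com/nfs", ReclaimPolicy: "Delete"},
	}
	claim := PVC{Name: "app-data", Namespace: "default", StorageClassName: "standard", RequestGi: 10}
	pv, err := buildPV(claim, classes)
	if err != nil {
		fmt.Println("provisioning failed:", err)
		return
	}
	fmt.Printf("%+v\n", pv)
}
```

The claim contributes the size and identity, and the class contributes the backend and reclaim policy, which is why one StorageClass can serve many PVCs.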

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit Joohwan when reposting.
