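containerd-CRI

Overview

CRI (Container Runtime Interface) defines the container and image service interfaces, and is specified with Protocol Buffers.

It defines how the kubelet interacts with different container runtimes.

The interface has a client side (the kubelet) and a server side (the CRI server, that is, the container runtime).

    kubelet xx \
      --container-runtime-endpoint=<Unix socket of the CRI server> \
      --image-service-endpoint=<Unix socket of the CRI server>

If the Runtime Service and the Image Service are served by the same gRPC server, only container-runtime-endpoint needs to be set: when image-service-endpoint is empty, it defaults to the same address as container-runtime-endpoint.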
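The defaulting rule can be sketched in a few lines of Python. This is an illustration only, not kubelet code: the function name `resolve_cri_endpoints` is hypothetical, and the socket path is the containerd default that appears in the configuration dump later in this article.

```python
from typing import Optional, Tuple


def resolve_cri_endpoints(
    container_runtime_endpoint: str,
    image_service_endpoint: Optional[str] = None,
) -> Tuple[str, str]:
    """Return (runtime_endpoint, image_endpoint) after applying the default."""
    if not container_runtime_endpoint:
        raise ValueError("container-runtime-endpoint must be set")
    # An empty image endpoint means: reuse the runtime endpoint.
    image_endpoint = image_service_endpoint or container_runtime_endpoint
    return container_runtime_endpoint, image_endpoint


if __name__ == "__main__":
    sock = "unix:///run/containerd/containerd.sock"
    print(resolve_cri_endpoints(sock))
```

With a single gRPC server, both values in the returned pair are the same socket.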

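Before Kubernetes 1.24, using a CRI server required setting --container-runtime=remote, because the default was --container-runtime=docker. Since dockershim was removed from the kubelet in 1.24, this flag is deprecated.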

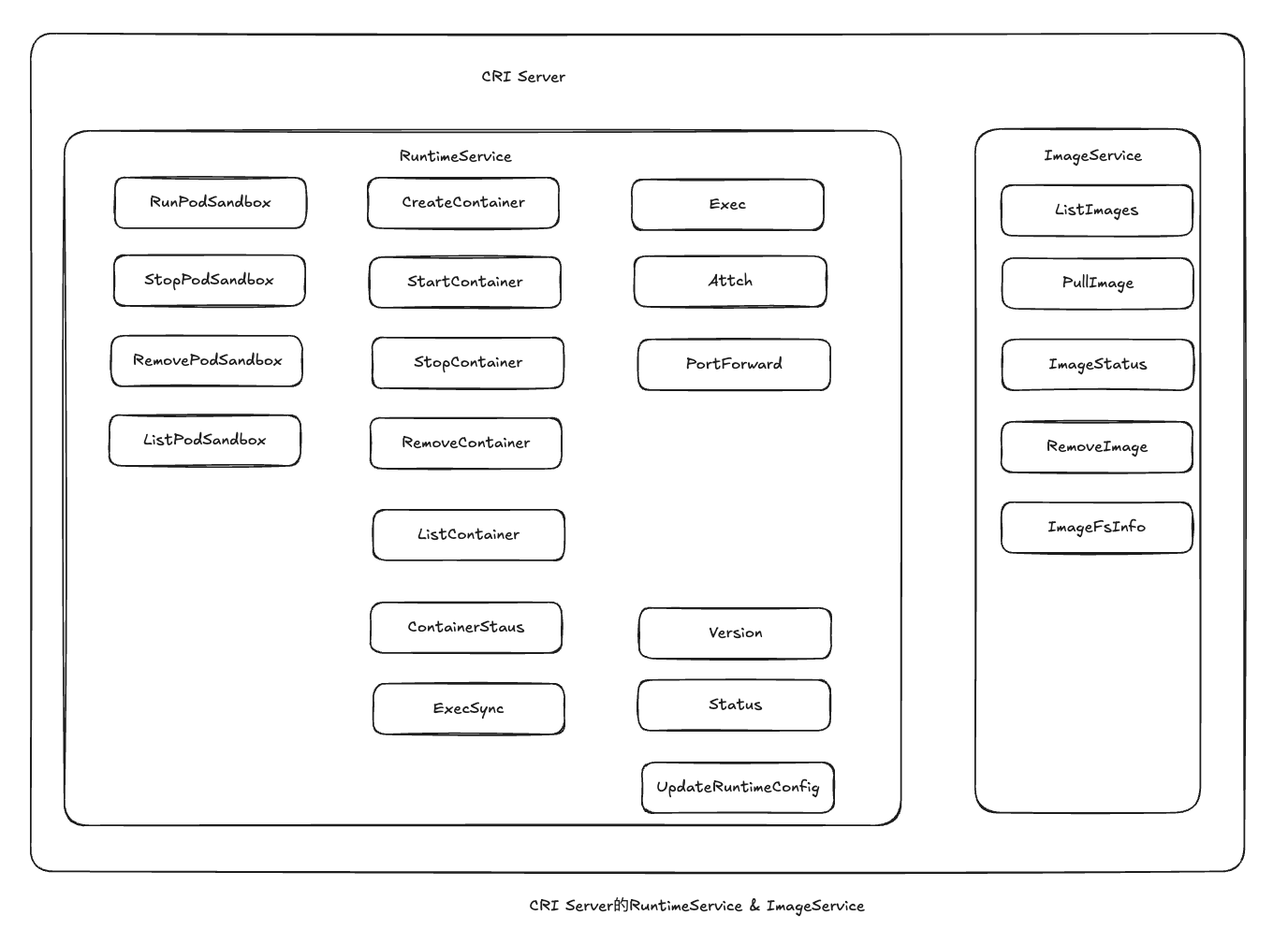

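RuntimeService

The Runtime Service manages the lifecycle of pods and containers. Its methods fall into four categories:

PodSandbox management: a PodSandbox corresponds one-to-one to a Kubernetes pod. It provides the pod's isolation environment and prepares the basic network infrastructure. With runc it corresponds to a pause container; with Kata or Firecracker it corresponds to a virtual machine.

Container management: manages the lifecycle of containers inside the sandbox above, such as creating, starting, and destroying containers.

Streaming API: used by the kubelet for Exec, Attach, and PortForward. These calls return the endpoint of a streaming server to the kubelet, and that endpoint then receives the subsequent Exec, Attach, and PortForward requests.

Runtime interface: mainly queries the state of the CRI server, such as CRI and CNI status, and updates the PodCIDR configuration.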

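Runtime Service interface description

| Category | Method | Description |
| --- | --- | --- |
| Sandbox | RunPodSandbox | Starts a pod sandbox, including the pod's network infrastructure. |
| Sandbox | StopPodSandbox | Stops the sandbox's processes and reclaims its network infrastructure. Idempotent. The kubelet calls StopPodSandbox at least once before calling RemovePodSandbox. |
| Sandbox | RemovePodSandbox | Removes the sandbox and the containers inside it. |
| Sandbox | PodSandboxStatus | Returns the status of a PodSandbox. |
| Sandbox | ListPodSandbox | Returns the list of PodSandboxes. |
| Container | CreateContainer | Creates a new container in the specified sandbox. |
| Container | StopContainer | Stops a running container within a grace period (timeout). Once the grace period has elapsed, the container must be forcibly terminated. Idempotent. |
| Container | RemoveContainer | Removes a container; if it is still running, it is forcibly removed. Idempotent. |
| Container | ListContainers | Lists containers, narrowed by a filter. |
| Container | ContainerStatus | Returns the status of a container; returns an error if the container does not exist. |
| Container | UpdateContainerResources | Updates the ContainerConfig of a container. |
| Container | ContainerStats | Returns statistics for a container, such as CPU and memory. |
| Container | ListContainerStats | Returns statistics for all running containers. |
| Runtime | UpdateRuntimeConfig | Updates the runtime configuration; containerd currently only handles PodCIDR changes. |
| Runtime | Status | Returns the runtime status (CRI and CNI). CRI is Ready as long as the CRI plugin responds normally; CNI readiness depends on the state of the CNI plugin. |
| Runtime | Version | Returns the runtime name, runtime version, and API version. |
| Container | ReopenContainerLog | Asks the runtime to reopen the container's stdout/stderr log file. Usually called after the log file has been rotated. If the container is not running, the runtime either creates a new log file and returns nil, or returns an error, in which case no log file is created. |
| Container | ExecSync | Runs a command in the container synchronously. |
| Streaming API | Exec | Prepares a streaming endpoint for executing a command in the container. The client connects to the container and can work in it much like an SSH session; leaving with exit does not affect the running container. |
| Streaming API | Attach | Prepares a streaming endpoint for attaching to a running container. It connects to the container's stdin and its input/output; typing exit terminates the process. |
| Streaming API | PortForward | Prepares a streaming endpoint that forwards to a port in the container. For example, kubectl port-forward pods/xxx 10000:8080 forwards local port 10000 to port 8080 in the container. |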
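To make the idempotency and ordering rules of the sandbox methods concrete, here is a minimal in-memory sketch. `ToyRuntimeService` and `tear_down` are hypothetical names, nothing here talks to a real CRI server, and treating RemovePodSandbox on an unknown id as a no-op is an assumption of this sketch.

```python
class ToyRuntimeService:
    """In-memory stand-in for a CRI runtime service (illustration only)."""

    def __init__(self) -> None:
        self._sandboxes = {}  # sandbox id -> "SANDBOX_READY" or "SANDBOX_NOTREADY"
        self._counter = 0

    def run_pod_sandbox(self, pod_name: str) -> str:
        self._counter += 1
        sandbox_id = f"{pod_name}-{self._counter}"
        self._sandboxes[sandbox_id] = "SANDBOX_READY"
        return sandbox_id

    def stop_pod_sandbox(self, sandbox_id: str) -> None:
        # Idempotent: repeating the call, or calling it for an unknown id,
        # changes nothing and raises nothing.
        if sandbox_id in self._sandboxes:
            self._sandboxes[sandbox_id] = "SANDBOX_NOTREADY"

    def remove_pod_sandbox(self, sandbox_id: str) -> None:
        # Assumption of this sketch: removing a missing sandbox is a no-op.
        self._sandboxes.pop(sandbox_id, None)

    def pod_sandbox_status(self, sandbox_id: str) -> str:
        return self._sandboxes[sandbox_id]

    def list_pod_sandbox(self) -> list:
        return sorted(self._sandboxes)


def tear_down(runtime: ToyRuntimeService, sandbox_id: str) -> None:
    """Mimic the kubelet's ordering: stop at least once, then remove."""
    runtime.stop_pod_sandbox(sandbox_id)
    runtime.remove_pod_sandbox(sandbox_id)
```

Calling `tear_down` twice on the same id is harmless, which is the property that lets the kubelet retry safely after a failure.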

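ImageService

The ImageService is fairly simple: it consists of the handful of image operations needed to run containers.

ImageService interface definition

| Category | Method | Description |
| --- | --- | --- |
| Image | ListImages | Lists the images currently present. |
| Image | ImageStatus | Returns the status of an image; if the image does not exist, ImageStatusResponse.Image is nil. |
| Image | PullImage | Pulls an image using the supplied authentication information. |
| Image | RemoveImage | Removes an image. Idempotent. |
| Image | ImageFsInfo | Returns information about the filesystem used to store images. |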
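The ImageStatus and RemoveImage semantics in the table can be sketched as follows. `ToyImageService` and `ensure_image` are hypothetical names, the image ids are fabricated from a hash of the reference, and no registry is contacted.

```python
import hashlib
from typing import Dict, Optional


class ToyImageService:
    """In-memory stand-in for a CRI image service (illustration only)."""

    def __init__(self) -> None:
        self._images: Dict[str, dict] = {}
        self.pulls = 0  # how many times pull_image was called

    def image_status(self, ref: str) -> Optional[dict]:
        # A missing image yields None, mirroring ImageStatusResponse.Image == nil.
        return self._images.get(ref)

    def pull_image(self, ref: str, auth: Optional[dict] = None) -> str:
        self.pulls += 1
        # Fake image id derived from the reference; real ids are content digests.
        image_id = "sha256:" + hashlib.sha256(ref.encode()).hexdigest()
        self._images[ref] = {"id": image_id, "ref": ref}
        return image_id

    def remove_image(self, ref: str) -> None:
        # Idempotent: removing an absent image is not an error.
        self._images.pop(ref, None)


def ensure_image(service: ToyImageService, ref: str, auth: Optional[dict] = None) -> str:
    """Pull only when ImageStatus reports that the image is absent."""
    status = service.image_status(ref)
    if status is not None:
        return status["id"]
    return service.pull_image(ref, auth)
```

A caller that checks the status first only pulls once, no matter how many pods on the node reference the same image.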

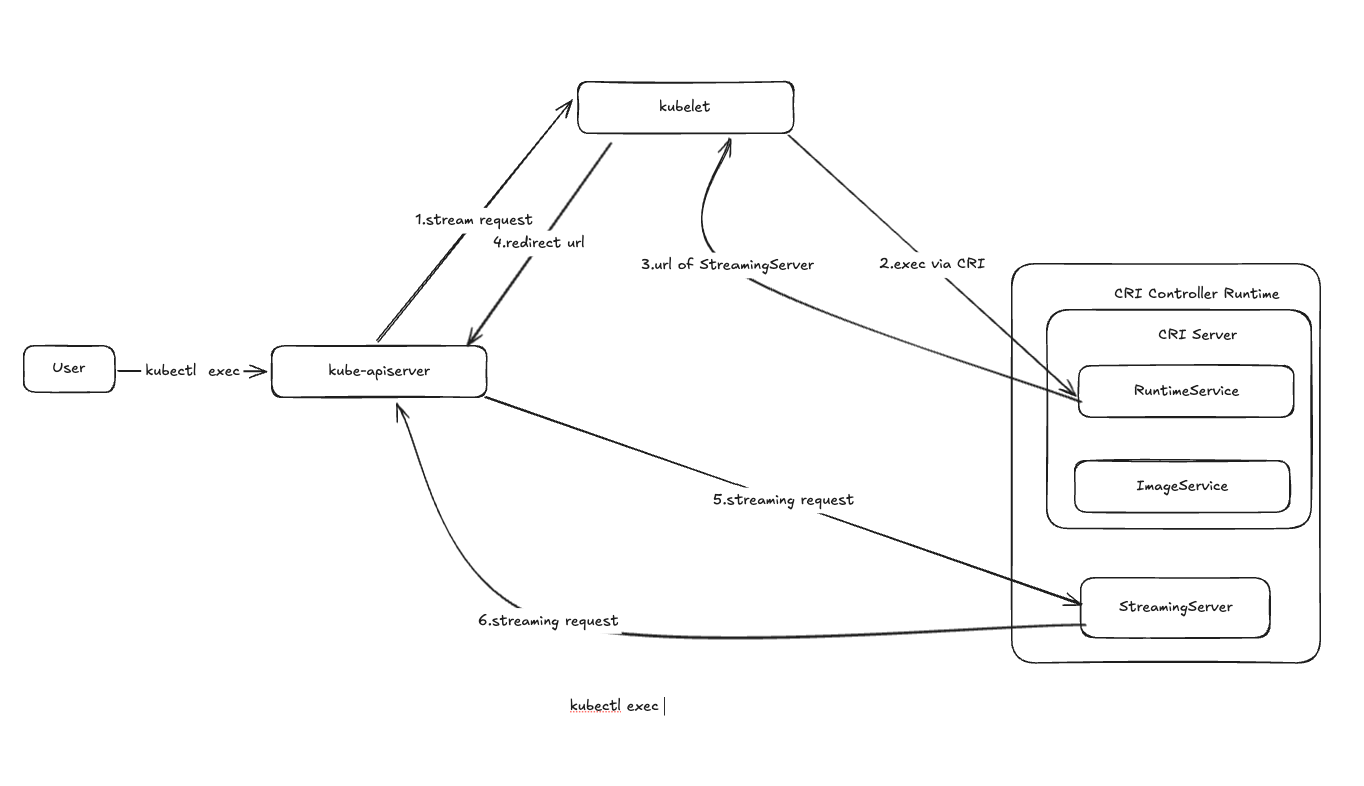

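The kubectl exec command goes through the following steps:

1. kubectl sends a POST request to kube-apiserver with the request path "/api/v1/namespaces///exec?xxx".

2. kube-apiserver sends a streaming request to the kubelet, and the kubelet calls the Exec function on the CRI server through CRI.

3. The CRI server returns the URL of the streaming server to the kubelet.

4. The kubelet returns a redirect response to kube-apiserver, redirecting the request to the streaming server URL.

5. kube-apiserver redirects the request to the streaming server URL.

6. The streaming server responds to the request. Note that it returns an HTTP protocol-upgrade response to kube-apiserver, telling kube-apiserver to switch to the SPDY protocol.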
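As an illustration of the first step, the sketch below assembles the request path that kubectl would POST to. It assumes the usual Kubernetes pod exec subresource layout (`/api/v1/namespaces/<namespace>/pods/<pod>/exec`) with `command`, `container`, `stdin`, `stdout`, `stderr`, and `tty` query parameters; the helper name `build_exec_path` is hypothetical.

```python
from typing import List, Optional
from urllib.parse import quote, urlencode


def build_exec_path(
    namespace: str,
    pod: str,
    command: List[str],
    container: Optional[str] = None,
    stdin: bool = False,
    stdout: bool = True,
    stderr: bool = True,
    tty: bool = False,
) -> str:
    """Build the path and query string of a pod exec request."""
    # Each argv element becomes its own repeated "command" parameter.
    query = [("command", arg) for arg in command]
    if container:
        query.append(("container", container))
    for name, enabled in (("stdin", stdin), ("stdout", stdout),
                          ("stderr", stderr), ("tty", tty)):
        if enabled:
            query.append((name, "true"))
    base = f"/api/v1/namespaces/{quote(namespace)}/pods/{quote(pod)}/exec"
    return f"{base}?{urlencode(query)}"


if __name__ == "__main__":
    print(build_exec_path("default", "web", ["ls", "-l"]))
```

Everything after this request (the redirect to the streaming server and the SPDY upgrade) happens between kube-apiserver, the kubelet, and the CRI server, so it is not modeled here.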

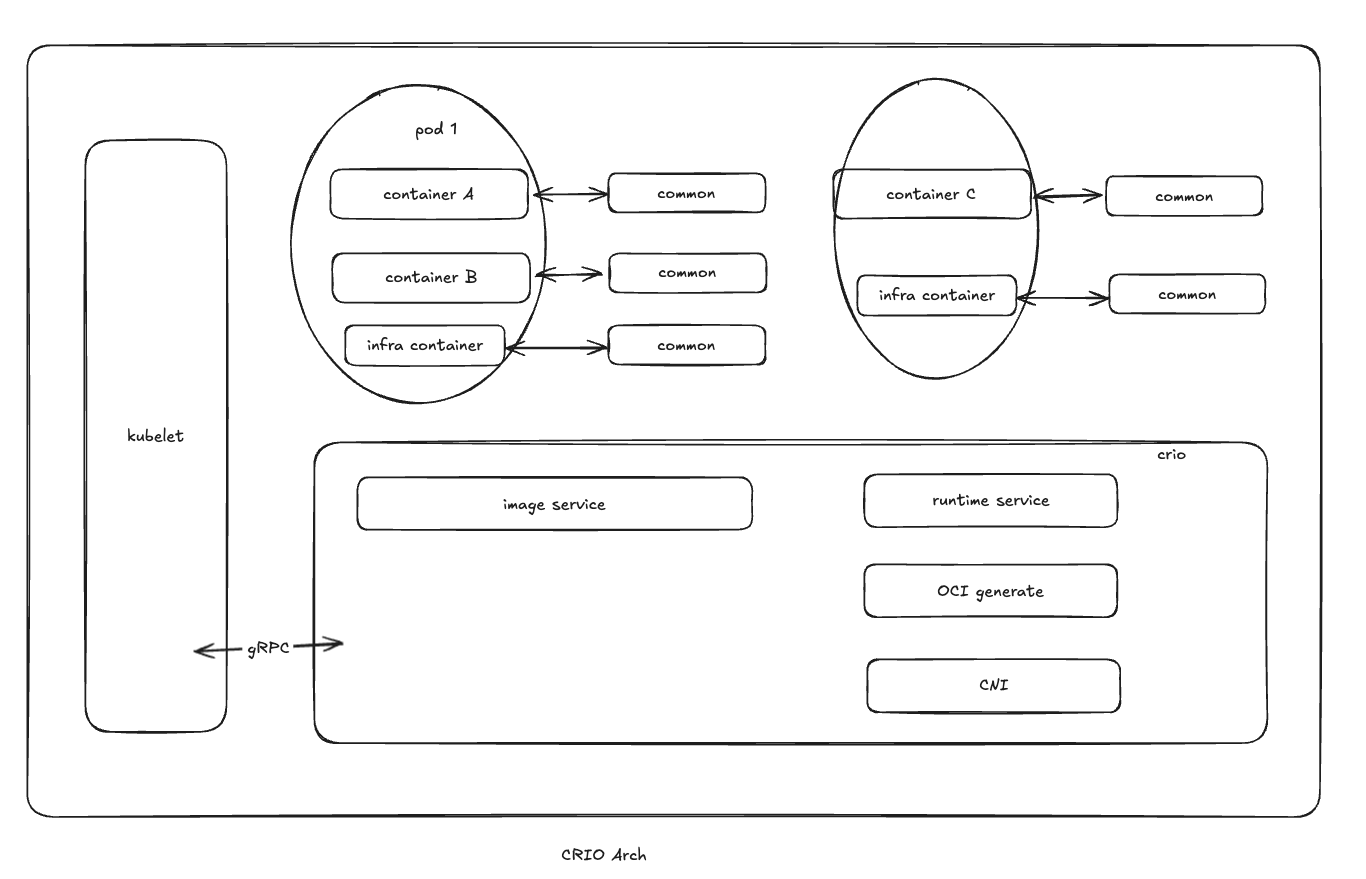

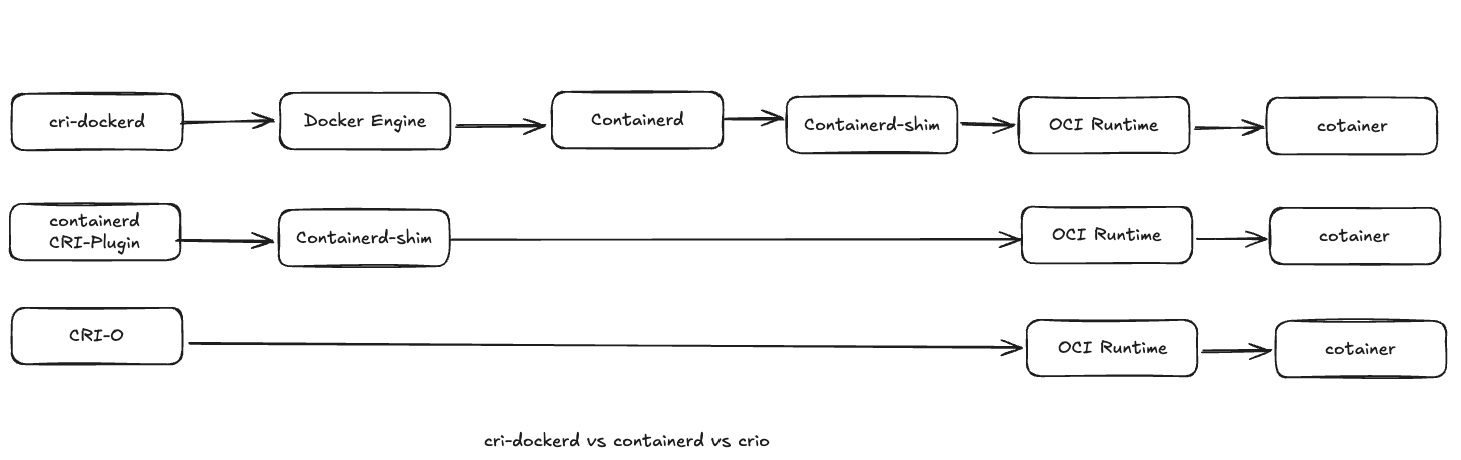

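Overview of several CRI implementations

cri-o

cri-o works as follows:

1. Kubernetes tells the kubelet to start a pod.

2. The kubelet forwards the request to the cri-o daemon through CRI.

3. cri-o uses containers/image to pull the image from the image registry.

4. The downloaded image is unpacked into the container's root filesystem and stored, through containers/storage, on a filesystem that supports copy-on-write (COW).

5. After the container's rootfs has been created, cri-o uses oci-runtime-tool (provided by the OCI organization) to generate an OCI runtime specification JSON file.

6. cri-o uses that runtime specification JSON file to launch an OCI-compatible runtime, which runs the container process. The default runtime is runc; in principle any OCI-compliant runtime is supported, such as Kata and gVisor.

7. Each container is monitored by its own conmon process, which provides a pty for the process with PID 1 in the container. It also handles the container's logging and records the exit code of the container process.

8. Networking is set up through CNI, so the community's many CNI plugins are supported.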
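To give a feel for the runtime specification file mentioned in the steps above, the following sketch builds a heavily reduced `config.json` as a Python dictionary. The field names follow the OCI runtime specification as commonly documented; the concrete values (spec version, rootfs path, hostname, command) are placeholders chosen for this example, and a real generated file contains many more fields such as mounts, capabilities, and cgroup settings.

```python
import json
from typing import List


def minimal_oci_spec(args: List[str], rootfs: str = "rootfs") -> dict:
    """Return a reduced OCI runtime spec for a single process (sketch only)."""
    return {
        "ociVersion": "1.0.2",  # placeholder version string
        "process": {
            "terminal": False,
            "cwd": "/",
            "args": list(args),
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
        },
        # Path to the unpacked root filesystem, relative to the bundle directory.
        "root": {"path": rootfs, "readonly": False},
        "hostname": "demo",  # hypothetical hostname
        "linux": {
            # Namespaces the runtime should create for the container.
            "namespaces": [
                {"type": "pid"},
                {"type": "network"},
                {"type": "ipc"},
                {"type": "uts"},
                {"type": "mount"},
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(minimal_oci_spec(["sleep", "3600"]), indent=2))
```

An OCI runtime such as runc reads a file of this shape from the bundle directory next to the rootfs.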

Compared with Docker and containerd, cri-o is designed solely for Kubernetes and optimized for it, and it has the shortest call chain.

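PouchContainer

PouchContainer is an efficient, lightweight, enterprise-grade rich container engine open-sourced by Alibaba.

PouchContainer manages application processes with systemd.

Compared with a single-process container, the rich container implemented by PouchContainer differs mainly in that its internal processes fall into several classes:

The init process with pid=1: a rich container does not use the CMD specified in the container image as the process with pid=1 inside the container. Instead it supports init processes such as systemd, sbin/init, and dumb-init, so that multi-process services inside the container, such as the crond and syslogd system services, can be managed better.

The container image's CMD: the image's CMD represents the business application and is the core of the rich container. Inside the rich container, the application is started through systemd.

System service processes inside the container: many traditional applications have long been developed against bare metal and depend on the system services it provides.

User-defined operations components: besides system services, enterprise operations teams also need to deploy their own operations components for the infrastructure.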

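firecracker-containerd

firecracker-containerd is a project open-sourced by AWS, built on containerd, that adds Firecracker support for CRI. Firecracker itself is a lightweight virtual machine monitor open-sourced by AWS.

Lightweight

Fast startup and a minimal device model: Firecracker has no BIOS and no PCI, and does not even need device passthrough.

High density: low memory overhead, about 3 MB per Firecracker microVM (the Firecracker project states less than 5 MiB).

Horizontal scaling: Firecracker microVMs can be created at a rate of 150 instances per second per host.

Oversubscription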

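virtlet

virtlet is a solution for managing virtual machines on Kubernetes. It implements a CRI for VMs and, like containerd, interacts with the kubelet through it, so no additional controller is needed. virtlet uses pod annotations to express the extra VM-specific information.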

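containerd and the CRI plugin

When a pod starts its containers, the CRI plugin works as follows:

1. The kubelet calls the RunPodSandbox API of the CRI plugin through CRI to create the sandbox environment for the pod.

2. While creating the pod sandbox, the CRI plugin creates the pod's network namespace and configures the container network through CNI. It then creates and starts a special container for the sandbox, the pause container, and adds it to that network namespace.

3. Once the PodSandbox exists, the kubelet calls the ImageService API of the CRI plugin to pull the container image. If the image is not present on the node, the CRI plugin calls the containerd API to pull it.

4. Using the image that was just pulled, the kubelet calls the Runtime Service API of the CRI plugin to create and start the containers inside the pod sandbox.

5. Finally, the CRI plugin calls containerd through the containerd client SDK to create the container and start it in the pod's cgroups and namespaces.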
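The call order in the workflow above can be traced with a small sketch. It only records names in order and performs no real work. The function name `start_pod_calls` and the image name used in the example are hypothetical, and skipping PullImage for images already on the node is a simplification of the real pull-policy logic.

```python
from typing import List, Set


def start_pod_calls(pod: str, images: List[str], local_images: Set[str]) -> List[str]:
    """Return the ordered CRI calls (and sandbox sub-steps) for starting a pod."""
    calls = [
        f"RunPodSandbox({pod})",
        "  create network namespace",
        "  configure network via CNI",
        "  start pause container in that namespace",
    ]
    for image in images:
        if image not in local_images:
            # Only images missing from the node are pulled.
            calls.append(f"PullImage({image})")
            local_images.add(image)
        calls.append(f"CreateContainer({image})")
        calls.append(f"StartContainer({image})")
    return calls


if __name__ == "__main__":
    for line in start_pod_calls("web", ["nginx:1.27"], set()):
        print(line)
```

The sandbox always comes first, because the containers are later started inside the namespaces and cgroups that the sandbox owns.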

Important configuration

    [root@iZbp1ebizftw2vpbpm737wZ ~]# containerd config default
    version = 3
    root = '/var/lib/containerd'
    state = '/run/containerd'
    temp = ''
    plugin_dir = ''
    disabled_plugins = []
    required_plugins = []
    oom_score = 0
    imports = []

    [grpc]
      address = '/run/containerd/containerd.sock'
      tcp_address = ''
      tcp_tls_ca = ''
      tcp_tls_cert = ''
      tcp_tls_key = ''
      uid = 0
      gid = 0
      max_recv_message_size = 16777216
      max_send_message_size = 16777216

    [ttrpc]
      address = ''
      uid = 0
      gid = 0

    [debug]
      address = ''
      uid = 0
      gid = 0
      level = ''
      format = ''

    [metrics]
      address = ''
      grpc_histogram = false

    [plugins]
      [plugins.'io.containerd.cri.v1.images']
        snapshotter = 'overlayfs'
        disable_snapshot_annotations = true
        discard_unpacked_layers = false
        max_concurrent_downloads = 3
        image_pull_progress_timeout = '5m0s'
        image_pull_with_sync_fs = false
        stats_collect_period = 10

        [plugins.'io.containerd.cri.v1.images'.pinned_images]
          sandbox = 'registry.k8s.io/pause:3.10'

        [plugins.'io.containerd.cri.v1.images'.registry]
          config_path = ''

        [plugins.'io.containerd.cri.v1.images'.image_decryption]
          key_model = 'node'

      [plugins.'io.containerd.cri.v1.runtime']
        enable_selinux = false
        selinux_category_range = 1024
        max_container_log_line_size = 16384
        disable_apparmor = false
        restrict_oom_score_adj = false
        disable_proc_mount = false
        unset_seccomp_profile = ''
        tolerate_missing_hugetlb_controller = true
        disable_hugetlb_controller = true
        device_ownership_from_security_context = false
        ignore_image_defined_volumes = false
        netns_mounts_under_state_dir = false
        enable_unprivileged_ports = true
        enable_unprivileged_icmp = true
        enable_cdi = true
        cdi_spec_dirs = ['/etc/cdi', '/var/run/cdi']
        drain_exec_sync_io_timeout = '0s'
        ignore_deprecation_warnings = []

        [plugins.'io.containerd.cri.v1.runtime'.containerd]
          default_runtime_name = 'runc'
          ignore_blockio_not_enabled_errors = false
          ignore_rdt_not_enabled_errors = false

          [plugins.'io.containerd.cri.v1.runtime'.containerd.runtimes]
            [plugins.'io.containerd.cri.v1.runtime'.containerd.runtimes.runc]
              runtime_type = 'io.containerd.runc.v2'
              runtime_path = ''
              pod_annotations = []
              container_annotations = []
              privileged_without_host_devices = false
              privileged_without_host_devices_all_devices_allowed = false
              base_runtime_spec = ''
              cni_conf_dir = ''
              cni_max_conf_num = 0
              snapshotter = ''
              sandboxer = 'podsandbox'
              io_type = ''

              [plugins.'io.containerd.cri.v1.runtime'.containerd.runtimes.runc.options]
                BinaryName = ''
                CriuImagePath = ''
                CriuWorkPath = ''
                IoGid = 0
                IoUid = 0
                NoNewKeyring = false
                Root = ''
                ShimCgroup = ''

        [plugins.'io.containerd.cri.v1.runtime'.cni]
          bin_dir = '/opt/cni/bin'
          conf_dir = '/etc/cni/net.d'
          max_conf_num = 1
          setup_serially = false
          conf_template = ''
          ip_pref = ''
          use_internal_loopback = false

      [plugins.'io.containerd.gc.v1.scheduler']
        pause_threshold = 0.02
        deletion_threshold = 0
        mutation_threshold = 100
        schedule_delay = '0s'
        startup_delay = '100ms'

      [plugins.'io.containerd.grpc.v1.cri']
        disable_tcp_service = true
        stream_server_address = '127.0.0.1'
        stream_server_port = '0'
        stream_idle_timeout = '4h0m0s'
        enable_tls_streaming = false

        [plugins.'io.containerd.grpc.v1.cri'.x509_key_pair_streaming]
          tls_cert_file = ''
          tls_key_file = ''

      [plugins.'io.containerd.image-verifier.v1.bindir']
        bin_dir = '/opt/containerd/image-verifier/bin'
        max_verifiers = 10
        per_verifier_timeout = '10s'

      [plugins.'io.containerd.internal.v1.opt']
        path = '/opt/containerd'

      [plugins.'io.containerd.internal.v1.tracing']

      [plugins.'io.containerd.metadata.v1.bolt']
        content_sharing_policy = 'shared'

      [plugins.'io.containerd.monitor.container.v1.restart']
        interval = '10s'

      [plugins.'io.containerd.monitor.task.v1.cgroups']
        no_prometheus = false

      [plugins.'io.containerd.nri.v1.nri']
        disable = false
        socket_path = '/var/run/nri/nri.sock'
        plugin_path = '/opt/nri/plugins'
        plugin_config_path = '/etc/nri/conf.d'
        plugin_registration_timeout = '5s'
        plugin_request_timeout = '2s'
        disable_connections = false

      [plugins.'io.containerd.runtime.v2.task']
        platforms = ['linux/amd64']

      [plugins.'io.containerd.service.v1.diff-service']
        default = ['walking']
        sync_fs = false

      [plugins.'io.containerd.service.v1.tasks-service']
        blockio_config_file = ''
        rdt_config_file = ''

      [plugins.'io.containerd.shim.v1.manager']
        env = []

      [plugins.'io.containerd.snapshotter.v1.blockfile']
        root_path = ''
        scratch_file = ''
        fs_type = ''
        mount_options = []
        recreate_scratch = false

      [plugins.'io.containerd.snapshotter.v1.btrfs']
        root_path = ''

      [plugins.'io.containerd.snapshotter.v1.devmapper']
        root_path = ''
        pool_name = ''
        base_image_size = ''
        async_remove = false
        discard_blocks = false
        fs_type = ''
        fs_options = ''

      [plugins.'io.containerd.snapshotter.v1.native']
        root_path = ''

      [plugins.'io.containerd.snapshotter.v1.overlayfs']
        root_path = ''
        upperdir_label = false
        sync_remove = false
        slow_chown = false
        mount_options = []

      [plugins.'io.containerd.snapshotter.v1.zfs']
        root_path = ''

      [plugins.'io.containerd.tracing.processor.v1.otlp']

      [plugins.'io.containerd.transfer.v1.local']
        max_concurrent_downloads = 3
        max_concurrent_uploaded_layers = 3
        config_path = ''

    [cgroup]
      path = ''

    [timeouts]
      'io.containerd.timeout.bolt.open' = '0s'
      'io.containerd.timeout.metrics.shimstats' = '2s'
      'io.containerd.timeout.shim.cleanup' = '5s'
      'io.containerd.timeout.shim.load' = '5s'
      'io.containerd.timeout.shim.shutdown' = '3s'
      'io.containerd.timeout.task.state' = '2s'

    [stream_processors]
      [stream_processors.'io.containerd.ocicrypt.decoder.v1.tar']
        accepts = ['application/vnd.oci.image.layer.v1.tar+encrypted']
        returns = 'application/vnd.oci.image.layer.v1.tar'
        path = 'ctd-decoder'
        args = ['--decryption-keys-path', '/etc/containerd/ocicrypt/keys']
        env = ['OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf']

      [stream_processors.'io.containerd.ocicrypt.decoder.v1.tar.gzip']
        accepts = ['application/vnd.oci.image.layer.v1.tar+gzip+encrypted']
        returns = 'application/vnd.oci.image.layer.v1.tar+gzip'
        path = 'ctd-decoder'
        args = ['--decryption-keys-path', '/etc/containerd/ocicrypt/keys']
        env = ['OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf']

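The CRI plugin's global configuration lives under [plugins.'io.containerd.grpc.v1.cri'] and is organized by module. In a version 3 configuration, such as the default output of containerd config default on containerd 2.x, most of these options have moved to [plugins.'io.containerd.cri.v1.runtime'] and [plugins.'io.containerd.cri.v1.images'].

cgroup driver configuration

Although containerd and Kubernetes both default to cgroupfs for managing cgroups, a system should have only one cgroup manager, so the systemd driver is recommended in production.

Configuring the containerd cgroup driver (using runc as the example):

    version = 2
    [plugins.'io.containerd.grpc.v1.cri'.containerd.runtimes.runc.options]
      SystemdCgroup = true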

Configuring the kubelet cgroup driver

Besides the container runtime's driver, Kubernetes also needs the kubelet's driver to be configured.

The KubeletConfiguration is located at /var/lib/kubelet/config.yaml by default:

    apiVersion: kubelet.config.k8s.io/v1beta1
    authentication:
      anonymous:
        enabled: false
      webhook:
        cacheTTL: 0s
        enabled: true
      x509:
        clientCAFile: /etc/kubernetes/pki/ca.crt
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 0s
        cacheUnauthorizedTTL: 0s
    cgroupDriver: systemd

Since v1.22, kubeadm defaults cgroupDriver to systemd when it is not set.
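Because the two drivers have to agree, a quick consistency check can be useful. The sketch below is a hypothetical helper, not part of any Kubernetes tool: it reads the top-level `cgroupDriver` key from KubeletConfiguration text with a plain line scan (no YAML library), and it assumes `cgroupfs` when the key is absent, which you may need to change for kubeadm-managed clusters.

```python
def kubelet_cgroup_driver(config_text: str, default: str = "cgroupfs") -> str:
    """Return the top-level cgroupDriver value from KubeletConfiguration text."""
    for line in config_text.splitlines():
        # Top-level keys start in column 0; nested keys are indented.
        if line.startswith("cgroupDriver:"):
            value = line.split(":", 1)[1].strip().strip("'\"")
            return value or default
    return default  # assumption: fall back when the key is not set


def containerd_cgroup_driver(systemd_cgroup: bool) -> str:
    """Map the runc option SystemdCgroup to a driver name."""
    return "systemd" if systemd_cgroup else "cgroupfs"


def drivers_match(config_text: str, systemd_cgroup: bool) -> bool:
    """True when the kubelet and containerd use the same cgroup driver."""
    return kubelet_cgroup_driver(config_text) == containerd_cgroup_driver(systemd_cgroup)


if __name__ == "__main__":
    sample = "apiVersion: kubelet.config.k8s.io/v1beta1\ncgroupDriver: systemd\n"
    print(drivers_match(sample, systemd_cgroup=True))
```

A mismatch (for example kubelet on systemd and containerd on cgroupfs) is the situation the recommendation above is meant to avoid.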

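snapshotter configuration

The snapshotter is the containerd storage plugin that prepares the rootfs for containers.

RuntimeClass configuration

RuntimeClass is a built-in resource; it was promoted to beta in Kubernetes v1.14.

With RuntimeClass, different pods can select different container runtimes, trading off security against performance.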

    [root@hcss-ecs-5425 ~]# kubectl explain runtimeclass
    KIND:       RuntimeClass
    VERSION:    node.k8s.io/v1

    DESCRIPTION:
        RuntimeClass defines a class of container runtime supported in the
        cluster. The RuntimeClass is used to determine which container runtime
        is used to run all containers in a pod. RuntimeClasses are manually
        defined by a user or cluster provisioner, and referenced in the PodSpec.
        The Kubelet is responsible for resolving the RuntimeClassName reference
        before running the pod. For more details, see
        https://kubernetes.io/docs/concepts/containers/runtime-class/

    FIELDS:
      apiVersion    <string>
        APIVersion defines the versioned schema of this representation of an
        object. Servers should convert recognized schemas to the latest internal
        value, and may reject unrecognized values. More info:
        https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

      handler       <string> -required-
        Handler specifies the underlying runtime and configuration that the CRI
        implementation will use to handle pods of this class. The possible
        values are specific to the node & CRI configuration. It is assumed that
        all handlers are available on every node, and handlers of the same name
        are equivalent on every node. For example, a handler called "runc" might
        specify that the runc OCI runtime (using native Linux containers) will
        be used to run the containers in a pod. The Handler must be lowercase,
        conform to the DNS Label (RFC 1123) requirements, and is immutable.

      kind          <string>
        Kind is a string value representing the REST resource this object
        represents. Servers may infer this from the endpoint the client submits
        requests to. Cannot be updated. In CamelCase. More info:
        https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

      metadata      <Object>
        More info:
        https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

      overhead      <Object>
        Overhead represents the resource overhead associated with running a pod
        for a given RuntimeClass. For more details, see
        https://kubernetes.io/docs/concepts/scheduling-eviction/pod-overhead/
        This field is in beta starting v1.18 and is only honored by servers that
        enable the PodOverhead feature.

      scheduling    <Object>
        Scheduling holds the scheduling constraints to ensure that pods running
        with this RuntimeClass are scheduled to nodes that support it. If
        scheduling is nil, this RuntimeClass is assumed to be supported by all
        nodes.

If no RuntimeClass is configured, containerd uses runc as the default handler (crun, kata, and gvisor are also supported):

    [plugins.'io.containerd.cri.v1.runtime'.containerd]
      default_runtime_name = 'runc'
      ignore_blockio_not_enabled_errors = false
      ignore_rdt_not_enabled_errors = false

Configuring containerd is not enough: to use a custom runtime, a RuntimeClass must also be created in the Kubernetes cluster:

    apiVersion: node.k8s.io/v1
    kind: RuntimeClass
    metadata:
      name: crun
    handler: crun

A pod selects a container runtime through runtimeClassName:

    apiVersion: v1
    kind: Pod
    spec:
      runtimeClassName: crun
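As a small illustration of the handler rule quoted in the kubectl explain output (lowercase, DNS label per RFC 1123), the sketch below validates a handler name and builds the two manifests as dictionaries, which can be serialized to JSON for kubectl. The helper names, the pod name, and the container image are hypothetical choices for this example.

```python
import json
import re

# RFC 1123 DNS label: lowercase alphanumerics and '-', starting and ending
# with an alphanumeric character, at most 63 characters long.
_DNS_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def is_valid_handler(handler: str) -> bool:
    return len(handler) <= 63 and _DNS_LABEL.fullmatch(handler) is not None


def runtime_class_manifest(name: str, handler: str) -> dict:
    if not is_valid_handler(handler):
        raise ValueError(f"invalid RuntimeClass handler: {handler!r}")
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


def pod_manifest(pod_name: str, image: str, runtime_class_name: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": pod_name, "image": image}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(runtime_class_manifest("crun", "crun"), indent=2))
    print(json.dumps(pod_manifest("demo", "registry.k8s.io/pause:3.10", "crun"), indent=2))
```

The handler value must also match a runtime name configured in containerd, otherwise the sandbox cannot be created for pods of that class.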

Registry configuration

Since containerd 1.5, registry configuration is available to ctr, to clients of the containerd image service, and to CRI clients.

In containerd, each registry can be given its own hosts.toml file that configures it, for example with certificates or mirror registries. config_path points to the directory that holds these per-registry files:

    [plugins.'io.containerd.cri.v1.images'.registry]
      config_path = ''

Configuring a private registry (using the older inline mirrors/configs form):

    [plugins."io.containerd.grpc.v1.cri".registry]
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
          endpoint = ["https://registry-1.docker.io"]
        # Everything up to here is generated by default; the entries below must be added.
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."192.168.66.4"]
          endpoint = ["https://192.168.66.4:443"]
      [plugins."io.containerd.grpc.v1.cri".registry.configs]
        [plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.66.4".tls]
          insecure_skip_verify = true
        [plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.66.4".auth]
          username = "admin"
          password = "Harbor12345"
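For the hosts.toml approach, a per-registry file could be generated roughly as follows. This is a sketch under assumptions: it uses the commonly documented hosts.toml keys (`server`, `[host."..."]`, `capabilities`, `skip_verify`), it assumes `config_path` is set to `/etc/containerd/certs.d`, and the helper names are hypothetical. Credentials are deliberately left out.

```python
import posixpath


def hosts_toml_path(config_path: str, registry_host: str) -> str:
    """Location of the hosts.toml file for one registry under config_path."""
    return posixpath.join(config_path, registry_host, "hosts.toml")


def render_hosts_toml(server: str, mirror: str, skip_verify: bool = False) -> str:
    """Render a hosts.toml that sends pulls for `server` through `mirror`."""
    lines = [
        f'server = "{server}"',
        "",
        f'[host."{mirror}"]',
        '  capabilities = ["pull", "resolve"]',
    ]
    if skip_verify:
        # Skips TLS verification; comparable to insecure_skip_verify above.
        lines.append("  skip_verify = true")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(hosts_toml_path("/etc/containerd/certs.d", "192.168.66.4"))
    print(render_hosts_toml("https://192.168.66.4", "https://192.168.66.4:443", skip_verify=True))
```

Keeping one directory per registry host means a registry can be added or removed without editing the main containerd configuration file.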

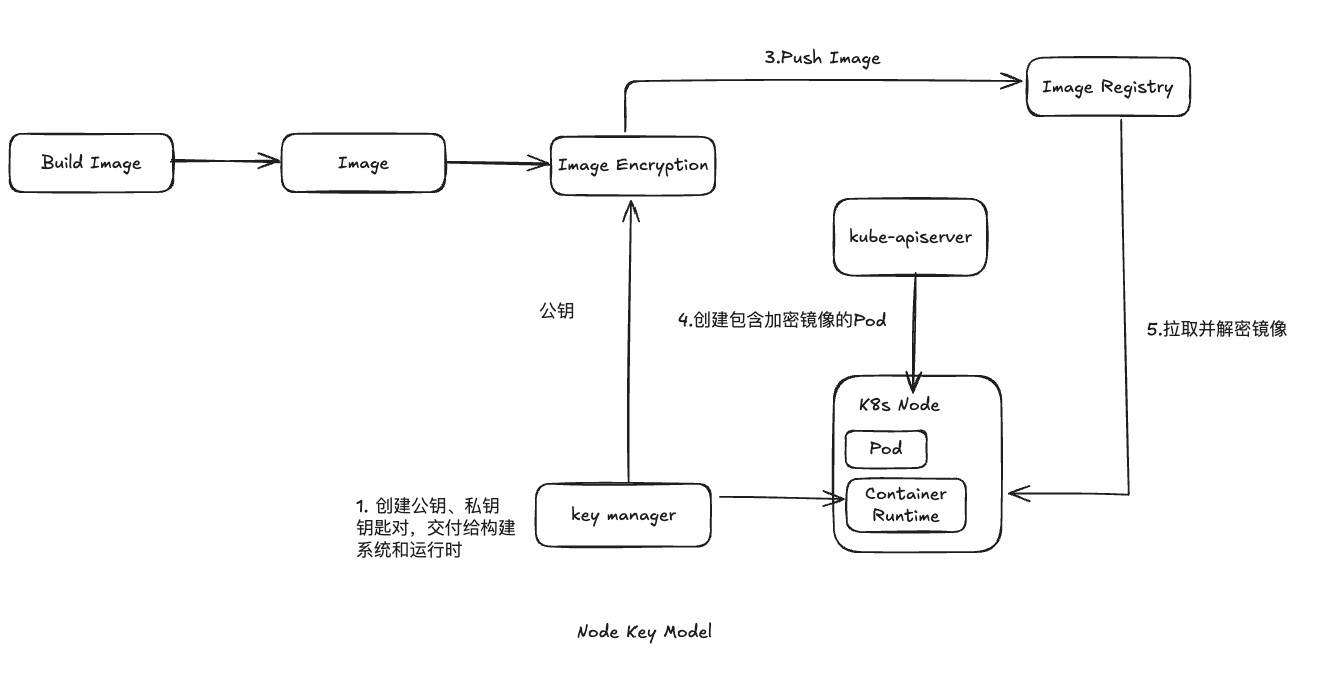

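Image decryption configuration

In the OCI image specification, an image consists of multiple layers. Layers can be encrypted to protect confidential data or code from unauthorized access.

OCI image encryption builds on the existing OCI image specification by adding a new mediaType that marks the data as encrypted; the details of the encryption are recorded in annotations.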
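The mediaType convention can be checked mechanically. The sketch below scans an image manifest (as a Python dictionary) for layers whose mediaType ends in `+encrypted`, matching the media types listed under `stream_processors` in the default configuration shown earlier. The function name and the digests in the example are made up.

```python
from typing import List

ENCRYPTED_SUFFIX = "+encrypted"


def encrypted_layers(manifest: dict) -> List[str]:
    """Return the digests of all layers marked as encrypted."""
    return [
        layer["digest"]
        for layer in manifest.get("layers", [])
        if layer.get("mediaType", "").endswith(ENCRYPTED_SUFFIX)
    ]


if __name__ == "__main__":
    example = {
        "schemaVersion": 2,
        "layers": [
            {   # plain gzip layer
                "mediaType": "application/vnd.oci.image.layer.v1.tar+gzip",
                "digest": "sha256:1111",  # made-up digest
            },
            {   # encrypted gzip layer
                "mediaType": "application/vnd.oci.image.layer.v1.tar+gzip+encrypted",
                "digest": "sha256:2222",  # made-up digest
            },
        ],
    }
    print(encrypted_layers(example))
```

Only layers flagged this way need to pass through a decryption step before they are unpacked.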

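containerd encryption in Kubernetes:

Image encryption and decryption in the Kubernetes ecosystem

Kubernetes supports two image decryption modes:

Node Key Model: the keys are placed on the Kubernetes worker nodes, and decryption is done at node granularity.

    [plugins.'io.containerd.cri.v1.images'.image_decryption]
      key_model = 'node'

Multi-tenancy Key Model: a multi-tenant mode that decrypts at cluster granularity (not yet implemented by the community).

stream_processor in containerd

stream_processor is a binary API in containerd that operates on content streams.

The incoming content stream is passed to the configured binary on STDIN; after the binary has processed it, the result is written to STDOUT and handed back to the stream_processor.

Because a stream_processor is an invocation of a binary, the effect is comparable to running an unpigz operation on every image layer.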
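The STDIN/STDOUT contract can be imitated with a subprocess. In this sketch the "processor" is a stand-in (a one-line Python program that gunzips its input) rather than `ctd-decoder`, and `run_stream_processor` is a hypothetical helper, so it only demonstrates the data flow, not containerd's actual plumbing.

```python
import gzip
import subprocess
import sys
from typing import List

# Stand-in processor binary: reads gzip data on stdin, writes raw bytes to stdout.
DEMO_PROCESSOR: List[str] = [
    sys.executable,
    "-c",
    "import gzip, sys; "
    "sys.stdout.buffer.write(gzip.decompress(sys.stdin.buffer.read()))",
]


def run_stream_processor(argv: List[str], payload: bytes) -> bytes:
    """Feed `payload` to the processor on stdin and return what it writes to stdout."""
    result = subprocess.run(argv, input=payload, stdout=subprocess.PIPE, check=True)
    return result.stdout


if __name__ == "__main__":
    layer = gzip.compress(b"layer data")
    print(run_stream_processor(DEMO_PROCESSOR, layer))
```

In the default configuration the same role is played by `ctd-decoder`, selected by the `accepts` media type and producing the `returns` media type.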

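CNI configuration

In containerd, CNI is configured under [plugins.'io.containerd.grpc.v1.cri'.cni] (in a version 3 configuration: [plugins.'io.containerd.cri.v1.runtime'.cni]). The supported options are:

bin_dir: the directory that holds the CNI plugin binaries, such as flannel, ipvlan, macvlan, and host-local.

conf_dir: the directory that holds the CNI conf files; the default is /etc/cni/net.d.

max_conf_num: the maximum number of CNI plugin config files to load from the CNI config directory.

By default, only one CNI config file is loaded.

If set to 0, all CNI plugin config files in conf_dir are loaded.

ip_pref: the strategy used to select the pod's primary IP address. Three strategies are currently supported:

ipv4: select the first IPv4 address. This is the default strategy.

ipv6: select the first IPv6 address.

cni: use the order returned by the CNI plugin, that is, the first IP address in the result.

conf_template: this option mainly provides temporary backward compatibility for kubenet users (those who do not yet run a CNI DaemonSet in production).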
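The max_conf_num and ip_pref rules described above can be sketched as two small functions. Both are illustrations with hypothetical names: sorting config files by name before truncating, and falling back to the first address when no address of the preferred family exists, are assumptions of this sketch rather than statements about containerd's code.

```python
import ipaddress
from typing import List


def select_conf_files(conf_files: List[str], max_conf_num: int = 1) -> List[str]:
    """Pick the CNI config files to load; 0 means load all of them."""
    ordered = sorted(conf_files)  # assumption: files are considered in name order
    return ordered if max_conf_num == 0 else ordered[:max_conf_num]


def select_primary_ip(ips: List[str], ip_pref: str = "ipv4") -> str:
    """Choose the pod's primary IP from the addresses returned by CNI."""
    if not ips:
        raise ValueError("CNI returned no IP addresses")
    if ip_pref == "cni":
        return ips[0]  # keep the order returned by the CNI plugin
    wanted = 6 if ip_pref == "ipv6" else 4
    for ip in ips:
        if ipaddress.ip_address(ip).version == wanted:
            return ip
    return ips[0]  # assumption: fall back to the first address


if __name__ == "__main__":
    print(select_conf_files(["20-b.conf", "10-a.conflist"]))
    print(select_primary_ip(["fd00::1", "10.0.0.2"], "ipv4"))
```

On a dual-stack pod the choice of ip_pref therefore decides which family shows up as the pod's primary IP.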

CNI configuration in full